by Gary Mintchell | Feb 19, 2020 | Uncategorized

While at the Hannover Messe Preview in Germany last week, I talked with representatives of a German consortium with the interesting name of “it’s OWL”. Following are some thoughts from the various organizations that make up the consortium.

Intelligent production and new business models

Artificial Intelligence is of crucial importance for the competitiveness of industry. In the Leading-Edge Cluster it’s OWL, six research institutes cooperate with more than 100 companies to develop practical solutions for small and medium-sized businesses. At the OWL joint stand (Hall 7, A12), over 40 exhibitors will demonstrate applications in machine diagnostics, predictive maintenance, process optimization, and robotics.

Prof. Dr. Roman Dumitrescu (Managing Director it’s OWL Clustermanagement GmbH and Director Fraunhofer IEM) explains: “Our research institutes are international leaders in the fields of machine learning, cognitive assistance systems and systems engineering. At our four universities and two Fraunhofer Institutes, 350 researchers are working on over 100 projects to make Artificial Intelligence usable for applications in industrial value creation. With it’s OWL, we bring this expert knowledge into practice. In 2020, we will launch three new strategic initiatives worth €50 million to unlock the potential of AI in production, product development, and the working world for small and medium-sized enterprises.”

In the ‘AI Marketplace’ initiative, 20 research institutes and companies are developing a digital platform for Artificial Intelligence in product development. Providers, users, and experts can network and develop solutions on this platform. In the competence centre ‘AI in the working world of industrial SMEs’, 25 partners from industry and science make their knowledge of work structuring in the context of AI available to companies.

Learning machine diagnostics and ‘SmartBox’ for process optimization

The Institute for Industrial Information Technology at the OWL University of Applied Sciences and Arts will present new results in intelligent machine diagnostics at the trade fair. Using a three-phase motor, it will illustrate how learning algorithms and information fusion can reliably identify, predict, and visualize the states of technical systems. Patterns and information hidden in time-series signals are learned and presented to the user in an understandable way. Inaccuracies and uncertainties in individual sensors are resolved through conflict-reducing information fusion. Motors themselves, for example, can serve as sensors: within a network of sensors and other data sources in production plants, motors can measure the “state of health” and, via AI, analyze the causes of malfunctions. This reduces scrap and saves up to 20 percent in materials.
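The institute's conflict-reducing fusion method is not described in detail here. As a much simpler, plainly swapped-in stand-in, the sketch below shows inverse-variance weighting, a textbook way to combine redundant readings of the same quantity so that noisier sensors count for less. The `Reading` type, the sensor values, and the variances are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import List


@dataclass
class Reading:
    """One sensor's estimate of a quantity plus its noise variance (hypothetical values)."""
    value: float
    variance: float


def fuse(readings: List[Reading]) -> Reading:
    """Combine independent readings by inverse-variance weighting.

    Sensors with a larger variance (less trustworthy) receive a smaller weight,
    and the fused variance is never larger than the smallest input variance.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    value = sum(w * r.value for w, r in zip(weights, readings)) / total
    return Reading(value=value, variance=1.0 / total)


# Two equally good sensors plus one noisy source (e.g., a motor used as a sensor).
fused = fuse([Reading(50.0, 1.0), Reading(52.0, 1.0), Reading(60.0, 16.0)])
print(f"{fused.value:.2f} +/- {fused.variance:.3f}")
```

Unlike a real conflict-reducing scheme, this weighting assumes the sensors are independent and unbiased; it only illustrates why trusting each sensor in proportion to its reliability helps.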

The ‘SmartBox’ of the Fraunhofer Institute IOSB-INA is a universally applicable solution that identifies process anomalies in various production environments on the basis of PROFINET data. The solution requires no configuration and learns the process behavior on its own.
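The SmartBox's internals are not described here. The following is a minimal sketch of the general "learn normal behavior, then flag deviations" idea, assuming a hypothetical stream of cycle times (in milliseconds) that has already been extracted from network traffic; it does not parse PROFINET frames, and `BaselineDetector`, `train_size`, and `k` are illustrative names.

```python
from __future__ import annotations

import statistics
from typing import List, Optional


class BaselineDetector:
    """Learns 'normal' from the first `train_size` samples, then flags outliers.

    No per-process thresholds are set by hand; the normal band is derived from
    observed data. `train_size` and `k` are illustrative defaults.
    """

    def __init__(self, train_size: int = 50, k: float = 3.0) -> None:
        self.train_size = train_size
        self.k = k
        self._training: List[float] = []
        self.mean: Optional[float] = None
        self.stdev: Optional[float] = None

    def observe(self, value: float) -> bool:
        """Feed one sample; return True if it is anomalous (always False while learning)."""
        if self.mean is None:
            self._training.append(value)
            if len(self._training) == self.train_size:
                self.mean = statistics.fmean(self._training)
                self.stdev = statistics.pstdev(self._training)
            return False
        if self.stdev == 0:
            return value != self.mean
        return abs(value - self.mean) > self.k * self.stdev


# Hypothetical cycle times (ms) from a stable process, followed by one slow cycle.
detector = BaselineDetector(train_size=5)
for t in [20.1, 19.8, 20.0, 20.2, 19.9, 20.05, 27.5]:
    if detector.observe(t):
        print(f"anomaly: cycle time {t} ms")
```

A production system would also have to cope with legitimate process changes, for example by periodically re-learning the baseline.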

With retrofitting solutions from the Fraunhofer Institute, companies can prepare the machines and systems already in their inventory for Industrie 4.0 applications without major investment. The spectrum ranges from mobile, suitcase-sized production data acquisition systems for potential studies to permanently installed retrofit solutions. Intelligent sensor systems, cloud connections, and machine learning methods form the basis for data analysis. This way, processes can be optimised, and production gains transparency, control, planning, safety, and flexibility.

Cognitive robotics and self-healing in autonomous systems

The Institute of Cognition and Robotics (CoR-Lab) presents a cognitive robotics system for highly flexible industrial production. The potential of model-driven software and system development for cognitive robotics is demonstrated using the example of automated terminal assembly in switch cabinet construction. For this purpose, machine learning methods for environmental perception and object recognition, automated planning algorithms, and model-based motion control are integrated into a robotic system. This enables the cell operator to perform different assembly tasks using reusable and combinable task blocks.
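CoR-Lab's actual software is not shown in the announcement. As a hypothetical sketch of the "reusable and combinable task blocks" idea, each block below is a small named step that updates a shared cell state, and an assembly task is simply an ordered list of blocks. The `TaskBlock` type, block names, and state keys are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class TaskBlock:
    """A reusable step: a name plus a function that updates the cell state."""
    name: str
    action: Callable[[Dict[str, object]], None]


def run_task(blocks: List[TaskBlock], state: Dict[str, object]) -> List[str]:
    """Execute blocks in order and return the log of executed block names."""
    log = []
    for block in blocks:
        block.action(state)
        log.append(block.name)
    return log


# Hypothetical blocks; a real cell would call perception, planning and motion control here.
locate = TaskBlock("locate_terminal", lambda s: s.update(target=s["next_slot"]))
pick = TaskBlock("pick_terminal", lambda s: s.update(holding=True))
place = TaskBlock("place_on_rail", lambda s: s.update(holding=False, placed=s.get("placed", 0) + 1))

# The same blocks are recombined into different assembly tasks.
mount_one = [locate, pick, place]
mount_two = mount_one + mount_one

cell_state: Dict[str, object] = {"next_slot": 3}
print(run_task(mount_two, cell_state), cell_state)
```

The design point is that the operator composes tasks from tested blocks rather than programming each new sequence from scratch.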

The research project “AI for Autonomous Systems” of the Software Innovation Campus Paderborn aims to achieve self-healing properties in autonomous technical systems based on the principles of natural immune systems. For this purpose, anomalies must be detected at runtime and their underlying causes diagnosed independently. Once a cause has been localized, behavioral adjustments must be planned and implemented to restore function. In addition, the security of the systems must be guaranteed at all times and system reliability must be increased. This requires a combination of artificial intelligence, machine learning, and biologically inspired algorithms.
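The project's methods are not detailed here, but the steps it names (detect an anomaly at runtime, diagnose and localize the cause, then plan and apply an adjustment) map onto the classic monitor-analyze-plan-execute loop from autonomic computing. The sketch below is a toy version of that loop under stated assumptions: the symptoms, thresholds, causes, and remedies are hypothetical lookup tables, whereas the project itself targets learned and biologically inspired methods.

```python
from __future__ import annotations

from typing import Dict, Optional

# Hypothetical knowledge base: symptom -> suspected cause -> corrective settings.
DIAGNOSES: Dict[str, str] = {
    "speed_low": "overloaded_drive",
    "temp_high": "blocked_cooling",
}
REMEDIES: Dict[str, Dict[str, float]] = {
    "overloaded_drive": {"load_limit": 0.8},
    "blocked_cooling": {"duty_cycle": 0.5},
}


def detect(reading: Dict[str, float]) -> Optional[str]:
    """Monitor/analyze: return a symptom name, or None if the reading looks normal.

    The 90 % speed and 80 degree thresholds are illustrative assumptions.
    """
    if reading["speed"] < 0.9 * reading["speed_setpoint"]:
        return "speed_low"
    if reading["temp"] > 80.0:
        return "temp_high"
    return None


def self_heal(reading: Dict[str, float], config: Dict[str, float]) -> Dict[str, float]:
    """One pass of the loop: detect, diagnose, plan, execute (returns the new config)."""
    symptom = detect(reading)
    if symptom is None:
        return config
    cause = DIAGNOSES[symptom]          # diagnose / localize
    adjustment = REMEDIES[cause]        # plan
    return {**config, **adjustment}     # execute by applying the adjusted settings


config = {"load_limit": 1.0, "duty_cycle": 1.0}
print(self_heal({"speed": 80.0, "speed_setpoint": 100.0, "temp": 60.0}, config))
```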

Predictive maintenance and digital twin

Within the framework of the ‘BOOST 4.0’ project, the largest European initiative for Big Data in industry, it’s OWL is working with 50 partners from 16 countries on various application scenarios for Big Data in production. it’s OWL focuses on predictive maintenance: in a pilot company, the systematic collection and evaluation of machine data from a hydraulic press and a material conveyor system make it possible to identify patterns in the production process. Fraunhofer IEM provided the technological and methodological basis, and successfully so: over the past two years, machine learning methods have significantly improved the prediction of machine failures in this specific application. The Mean Time To Repair (MTTR) has already been reduced by more than 30 percent, and the Mean Time Between Failures (MTBF) is now six times longer than before. A model of the predictive production line can be seen at the stand.
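For readers less familiar with the two maintenance metrics, here is a minimal sketch of how MTTR and MTBF are commonly computed from a failure log over an observation window. The function name, log format, and numbers are hypothetical, not data from the BOOST 4.0 pilot.

```python
from __future__ import annotations

from typing import List, Tuple


def mttr_mtbf(window_hours: float, outages: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Return (MTTR, MTBF) in hours.

    `outages` holds (failure_time, back_in_service_time) pairs inside the window.
    MTTR = total repair time / number of failures
    MTBF = total operating (up) time / number of failures
    """
    if not outages:
        raise ValueError("no failures recorded; MTBF is undefined for this window")
    repair_time = sum(end - start for start, end in outages)
    uptime = window_hours - repair_time
    n = len(outages)
    return repair_time / n, uptime / n


# Hypothetical 1,000-hour window with two outages of 5 hours each.
mttr, mtbf = mttr_mtbf(1000.0, [(200.0, 205.0), (600.0, 605.0)])
print(f"MTTR = {mttr:.1f} h, MTBF = {mtbf:.1f} h")  # MTTR = 5.0 h, MTBF = 495.0 h
```

In these terms, a six-times-longer MTBF means the average up-time between failures grew by a factor of six, and an MTTR reduced by more than 30 percent means repairs now take less than 70 percent of their former average duration.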

The digital twin is an important prerequisite for increasing the potential for efficiency and productivity in all phases of the machine life cycle. Companies and research institutes are working on the technical infrastructure for digital twins in an it’s OWL project. Thanks to interoperability, digital descriptions and sub-models of machines, products, and equipment, as well as their interaction over the entire life cycle, are now accessible. Requirements from the fields of energy and production technology as well as existing Industrie 4.0 standards and IT systems are taken into account. This is expected to result in potential savings of over 50 percent. At the joint stand, Lenze and Phoenix Contact will use typical machine modules to demonstrate how digital twins can be used to exchange information between components, machines, visualisations, and digital services across manufacturers. This interoperability shows for the first time how combined data can be turned into useful information with added value for different user groups. For example, machine operators and maintenance staff can detect anomalies and receive troubleshooting instructions.

Connect and get started – production optimization made easy

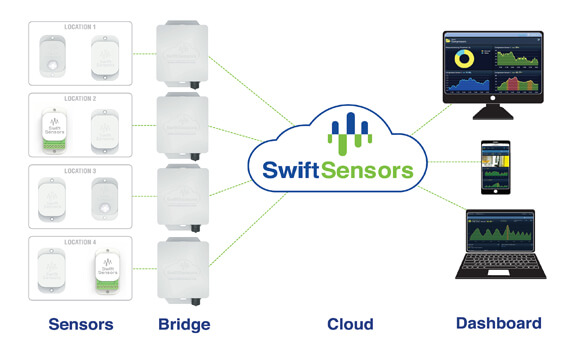

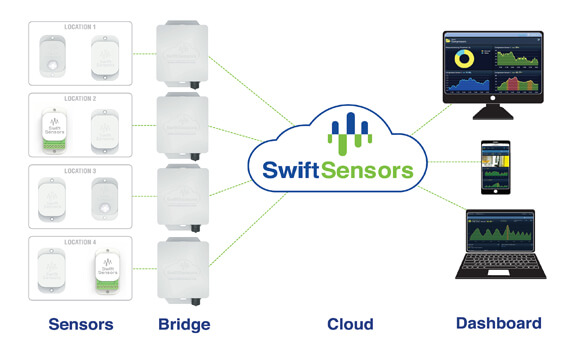

The cooperation in the Leading-Edge Cluster gives rise to new business ideas that are developed into successful start-ups. For example, Prodaso—a spin-off from Bielefeld University of Applied Sciences—has developed a simple and quickly implementable solution for the acquisition and visualization of machine and production data. The hardware can be connected to a machine in a few minutes via plug-and-play. The machine data is displayed directly in the cloud.

Prodaso has succeeded in solving a central challenge: until now, networking machines from different manufacturers has been complex and costly. The Prodaso system can be retrofitted to all existing systems, independent of manufacturer and interface. In addition, the start-up provides automated analysis and optimization tools. These enable companies to detect irregularities and deviations in the process flow at an early stage and to take appropriate measures. The company, founded in 2019, has already connected approximately 100 machines at companies in the manufacturing industry.

by Gary Mintchell | Oct 24, 2019 | Technology

Cray, an HPE company, held a panel discussion webinar on October 18 to discuss Exascale (10^18, get it?) supercomputing. This is definitely not in my area of expertise, but it is certainly interesting.

Following is information I gleaned from links they sent me. Basically, it makes the case for supercomputing: not only the computers, but also the networking that supports them.

Today’s science, technology, and big data questions are bigger, more complex, and more urgent than ever. Answering them demands an entirely new approach to computing. Meet the next era of supercomputing. Code-named Shasta, this system is our most significant technology advancement in decades. With it, we’re introducing revolutionary capabilities for revolutionary questions. Shasta is the next era of supercomputing for your next era of science, discovery, and achievement.

WHY SUPERCOMPUTING IS CHANGING

The kinds of questions being asked today have created a sea-change in supercomputing. Increasingly, high-performance computing systems need to be able to handle massive converged modeling, simulation, AI, and analytics workloads.

With these needs driving science and technology, the next generation of supercomputing will be characterized by exascale performance, data-centric workloads and diversification of processor architectures.

SUPERCOMPUTING REDESIGNED

Shasta is that entirely new design. We’ve created it from the ground up to address today’s diversifying needs.

Built to be data-centric, it runs diverse workloads all at the same time. Hardware and software innovations tackle system bottlenecks, manageability, and job completion issues that emerge or grow when core counts increase, compute node architectures proliferate, and workflows expand to incorporate AI at scale.

It eliminates the distinction between clusters and supercomputers with a single new system architecture, enabling a choice of computational infrastructure without tradeoffs. And it allows for mixing and matching multiple processor and accelerator architectures, with support for our new Cray-designed and developed interconnect we call Slingshot.

EXASCALE-ERA NETWORKING

Slingshot is our new high-speed, purpose-built supercomputing interconnect. It is our eighth-generation scalable HPC network. In earlier Cray designs, we pioneered the use of adaptive routing, pioneered the design of high-radix switch architectures, and invented a new low-diameter system topology, the dragonfly.

Slingshot breaks new ground again. It features Ethernet capability, advanced adaptive routing, first-of-a-kind congestion control, and sophisticated quality-of-service capabilities. Support for both IP-routed and remote memory operations broadens the range of applications beyond traditional modeling and simulation.

Quality-of-service and novel congestion management features limit the impact of other applications, system services, I/O traffic, or co-tenant workloads on critical workloads. Reducing the network diameter from five hops (in the current Cray XC™ generation) to three reduces cost, latency, and power while improving sustained bandwidth and reliability.
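One way to see where a three-hop diameter comes from: in a dragonfly, routers are organized into groups. If every router in a group links directly to every other router in that group, and every pair of groups shares a global link, any router reaches any other in at most one local hop, one global hop, and one more local hop. In the XC generation's dragonfly, routers within a group were connected all-to-all only along rows and columns, so each local leg could take two hops, which is consistent with the five-hop figure (2 + 1 + 2). The sketch below builds a small, hypothetical dragonfly of the fully connected kind and measures its diameter by breadth-first search; the group sizes and the rule for assigning global links are illustrative assumptions, not Slingshot's actual wiring.

```python
from __future__ import annotations

from collections import deque
from itertools import combinations
from typing import Dict, Set, Tuple

Router = Tuple[int, int]  # (group, router index within the group)


def build_dragonfly(a: int, h: int) -> Dict[Router, Set[Router]]:
    """Balanced dragonfly: g = a*h + 1 groups of `a` routers, each with `h` global links.

    Routers inside a group are fully connected; each pair of groups gets exactly one global link.
    """
    g = a * h + 1
    adj: Dict[Router, Set[Router]] = {(grp, r): set() for grp in range(g) for r in range(a)}
    # Local links: all-to-all inside each group.
    for grp in range(g):
        for r1, r2 in combinations(range(a), 2):
            adj[(grp, r1)].add((grp, r2))
            adj[(grp, r2)].add((grp, r1))
    # Global links: group i's port (j - i - 1) mod g joins group j's port (i - j - 1) mod g.
    for i, j in combinations(range(g), 2):
        u = (i, ((j - i - 1) % g) // h)
        v = (j, ((i - j - 1) % g) // h)
        adj[u].add(v)
        adj[v].add(u)
    return adj


def diameter(adj: Dict[Router, Set[Router]]) -> int:
    """Longest shortest path (in router-to-router hops), via BFS from every router."""
    worst = 0
    for src in adj:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            node = queue.popleft()
            for nxt in adj[node]:
                if nxt not in dist:
                    dist[nxt] = dist[node] + 1
                    queue.append(nxt)
        worst = max(worst, max(dist.values()))
    return worst


print(diameter(build_dragonfly(a=4, h=2)))  # 3
```

Here each router needs only five ports (three local, two global) to reach 35 others in at most three hops; fewer hops per path is where the claimed savings in latency, cost, and power come from.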

FLEXIBILITY AND TCO

As your workloads rapidly evolve, the ability to choose your architecture becomes critical. With Shasta, you can incorporate any silicon processing choice, or a heterogeneous mix, with a single management and application development infrastructure. Flex from single- to multi-socket nodes, GPUs, FPGAs, and other processing options that may emerge, such as AI-specialized accelerators.

Designed for a decade or more of work, Shasta also eliminates the need for frequent, expensive upgrades, giving you exceptionally low total cost of ownership. With its software architecture, you can deploy a workflow and management environment in a single system, regardless of packaging.

Shasta packaging comes in two options: a 19” air- or liquid-cooled, standard datacenter rack and a high-density, liquid-cooled rack designed to take 64 compute blades with multiple processors per blade.

Additionally, Shasta supports processors well over 500 watts, eliminating the need to do forklift upgrades of system infrastructure to accommodate higher-power processors.

by Gary Mintchell | Sep 25, 2019 | Data Management, Internet of Things, Manufacturing IT

DataOps: a phrase I had not heard before. Now I know. Last week, while I was in California, I ran into John Harrington, who, along with fellow former Kepware leaders Tony Paine and Torey Penrod-Cambra, left Kepware following its acquisition by PTC to found a new company in the DataOps for Industry market. The news he told me about went live yesterday. HighByte announced that its beta program for HighByte Intelligence Hub is now live. More than a dozen manufacturers, distributors, and system integrators from the United States, Europe, and Asia have already been accepted into the program and granted early access to the software in exchange for their feedback.

Intelligence Hub

HighByte Intelligence Hub will be the company’s first product to market since incorporating in August 2018. HighByte launched the beta program as part of its Agile approach to software design and development. The aim of the program is to improve performance, features, functionality, and user experience of the product prior to its commercial launch later this year.

HighByte Intelligence Hub belongs to a new classification of software in the industrial market known as DataOps solutions. HighByte Intelligence Hub was developed to solve data integration and security problems for industrial businesses. It is the only solution on the market that combines edge operations, advanced data contextualization, and the ability to deliver secure, application-specific information. Other approaches are highly customized and require extensive scripting and manual manipulation, which cannot scale beyond initial requirements and are not viable solutions for long-term digital transformation.
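The announcement does not describe how HighByte Intelligence Hub works internally. To make "data contextualization" concrete, here is a hypothetical sketch of the general idea only: raw, flat tag values from a controller are mapped into a named asset model with units, and then reshaped into the subset a particular application needs. The tag names, model fields, and functions are invented for illustration and are not HighByte's API.

```python
from __future__ import annotations

import json
from typing import Any, Callable, Dict, Optional, Tuple

# Raw values as they might arrive from a controller: opaque names, no units, no context (hypothetical).
raw_tags: Dict[str, int] = {"PLC1.T0042": 1480, "PLC1.T0043": 731, "PLC1.T0044": 1}

# Reusable model definition: attribute -> (source tag, unit, conversion). All names are invented.
PUMP_MODEL: Dict[str, Tuple[str, Optional[str], Callable[[int], Any]]] = {
    "speed": ("PLC1.T0042", "rpm", float),
    "temperature": ("PLC1.T0043", "degC", lambda v: v / 10),  # raw value in tenths of a degree
    "running": ("PLC1.T0044", None, bool),
}


def contextualize(asset_id: str, site: str, model: dict, tags: dict) -> dict:
    """Map raw tag values onto a named, self-describing asset record."""
    attributes = {}
    for name, (tag, unit, convert) in model.items():
        attributes[name] = {"value": convert(tags[tag]), "unit": unit}
    return {"asset": asset_id, "site": site, "attributes": attributes}


def for_maintenance_app(record: dict) -> str:
    """Application-specific view: only what a hypothetical maintenance dashboard needs, as JSON."""
    attrs = record["attributes"]
    return json.dumps({
        "asset": record["asset"],
        "temp_c": attrs["temperature"]["value"],
        "running": attrs["running"]["value"],
    })


record = contextualize("pump-07", "plant-a", PUMP_MODEL, raw_tags)
print(for_maintenance_app(record))
```

Because the model is defined once and reused, a second pump only needs a different tag mapping, which is the kind of repeatability that hand-written per-site scripts tend to lack.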

“We recognized a major problem in the market,” said Tony Paine, Co-Founder & CEO of HighByte. “Industrial companies are drowning in data, but they are unable to use it. The data is in the wrong place; it is in the wrong format; it has no context; and it lacks consistency. We are looking to solve this problem with HighByte Intelligence Hub.”

The company’s R&D efforts have been fueled by two non-equity grants awarded by the Maine Technology Institute (MTI) in 2019. “We are excited to join HighByte on their journey to building a great product and a great company here in Maine,” said Lou Simms, Investment Officer at MTI. “HighByte was awarded these grants because of the experience and track record of their founding team, large addressable market, and ability to meet business and product milestones.”

To further accelerate product development and go-to-market activities, HighByte is actively raising a seed investment round. For more information, please contact [email protected].

Learn more about the HighByte founding team. They are all people I’ve known for many years in the data connectivity business.

Background

From Wikipedia: DataOps is an automated, process-oriented methodology, used by analytic and data teams, to improve the quality and reduce the cycle time of data analytics. While DataOps began as a set of best practices, it has now matured to become a new and independent approach to data analytics. DataOps applies to the entire data lifecycle from data preparation to reporting, and recognizes the interconnected nature of the data analytics team and information technology operations.

DataOps incorporates the Agile methodology to shorten the cycle time of analytics development in alignment with business goals.

DataOps is not tied to a particular technology, architecture, tool, language or framework. Tools that support DataOps promote collaboration, orchestration, quality, security, access and ease of use.

From Oracle: DataOps, or data operations, is the latest agile operations methodology to spring from the collective consciousness of IT and big data professionals. It focuses on cultivating data management practices and processes that improve the speed and accuracy of analytics, including data access, quality control, automation, integration, and, ultimately, model deployment and management.

At its core, DataOps is about aligning the way you manage your data with the goals you have for that data. If you want to, say, reduce your customer churn rate, you could leverage your customer data to build a recommendation engine that surfaces products that are relevant to your customers — which would keep them buying longer. But that’s only possible if your data science team has access to the data they need to build that system and the tools to deploy it, and can integrate it with your website, continually feed it new data, monitor performance, etc., an ongoing process that will likely include input from your engineering, IT, and business teams.

Conclusion

As we move further along the Digital Transformation path of leveraging digital data to its utmost, this looks to be a good tool in the utility belt.

by Gary Mintchell | Mar 27, 2018 | Automation, Internet of Things, Wireless

by Gary Mintchell | Jan 30, 2018 | Internet of Things

These days I’m all about IoT and digitalization. This is the next movement following the automation trend I championed some 15 years ago.

Last month, I started receiving emails with predictions for 2018. It’s not my favorite topic, but I started saving them. I really only received a couple of good ones. Here they are: one from Cisco and one from FogHorn Systems.

Cisco Outlook

From a Cisco blog post written by Rowan Trollope, Cisco’s SVP of the Internet of Things (IoT) and Applications Division, come several looks at IoT from a variety of angles. I encourage you to visit the blog for more details.

Until now, the Internet-of-Things revolution has been, with notable outlier examples, largely theoretical and experimental. In 2018, we expect that many existing projects will show measurable returns and that more projects will be launched to capitalize on data produced by billions of new connected things.

With increased adoption there will be challenges: Our networks were not built to support the volumes and types of traffic that IoT generates. Security systems were not originally designed to protect connected infrastructure against IoT attacks. And managing industrial equipment that is connected to traditional IT requires new partnerships.

I asked the leaders of some of the IoT-focused teams at Cisco to describe their predictions for the coming year, to showcase some of these changes. Here they are.

IoT Data Becomes a Bankable Asset

In 2018, winning with IoT will mean taking control of the overwhelming flood of new data coming from the millions of things already connected, and the billions more to come. Simply consolidating that data isn’t the solution; neither is giving data away with the vague hope of achieving business benefits down the line. Data owners need to take control of their IoT data to drive towards business growth. The Economist this year said, “Data is the new oil,” and we agree.

This level of data control will help businesses deliver new services that drive top-line results.

– Jahangir Mohammed, VP & GM of IoT, Cisco

AI Revolutionizes Data Analytics

In 2018, we will see a growing convergence between the Internet of Things and Artificial Intelligence. AI+IoT will lead to a shift away from batch analytics based on static datasets, to dynamic analytics that leverages streaming data.

Typically, AI learns from patterns. It can predict future trends and recommend business-critical actions. AI plus IoT can recommend, say, when to service a part before it fails or how to route transit vehicles based on constantly changing data.

– Maciej Kranz, VP, Strategic Innovation at Cisco, and author of New York Times bestseller, Building the Internet of Things
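To illustrate the batch-versus-streaming shift in miniature: a batch job recomputes statistics over a stored dataset, while a streaming job updates them incrementally as each reading arrives, without keeping the history. The sketch below uses Welford's online algorithm for mean and variance; the `RunningStats` class name and the readings are hypothetical.

```python
from __future__ import annotations

import math


class RunningStats:
    """Welford's online algorithm: constant memory per stream, numerically stable."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the current mean

    def update(self, x: float) -> None:
        """Fold one new reading into the running mean and variance."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self) -> float:
        """Population variance of everything seen so far (0.0 before any samples)."""
        return self._m2 / self.n if self.n else 0.0

    @property
    def stdev(self) -> float:
        return math.sqrt(self.variance)


stats = RunningStats()
for reading in [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]:  # e.g., vibration readings arriving one by one
    stats.update(reading)
print(stats.mean, stats.variance)
```

The running statistics match what a batch computation over the full list would give, but they are available after every reading, which is what lets a model flag, for example, a part drifting toward failure while the data is still arriving.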

Interoperable IoT Becomes the Norm

The growth of devices and the business need for links between them has made for a wild west of communications in IoT. In 2018, a semblance of order will come to the space.

With the release of the Open Connectivity Foundation (OCF) 1.3 specification, consumer goods manufacturers can now choose a secure, standards-based approach to device-to-device interactions and device-to-cloud services in a common format, without having to rely on, or settle for, a proprietary device-to-cloud ecosystem.

Enterprise IoT providers will also begin to leverage OCF for device-to-device communications in workplace and warehouse applications, and Open Mobile Alliance’s Lightweight Machine-to-Machine (LwM2M) standard will take hold as the clear choice for remote management of IoT devices.

In Industrial IoT, OPC Unified Architecture (OPC UA) has emerged as the clear standard for interoperability, seeing record growth in adoption, with over 120 million installs expected as 2017 draws to an end. It will continue to grow into new industrial areas in 2018, driven by support for Time Sensitive Networking.

– Chris Steck, Head of Standardization, IoT & Industries, Cisco

IoT Enables Next-Gen Manufacturing

Manufacturing is buzzing about Industrie 4.0, the term for a collection of new capabilities for smart factories, that is driving what is literally the next industrial revolution. IoT technologies are connecting new devices, sensors, machines, and other assets together, while Lean Six Sigma and continuous improvement methodologies are harvesting value from new IoT data. Early adopters are already seeing big reductions in equipment downtime (from 15 to 95%), process waste and energy consumption in factories.

– Bryan Tantzen, Senior Director, Industry Products, Cisco

Connected Roadways Lay the Groundwork for Connected Cars

Intelligent roadways that sense conditions and traffic will adjust speed limits, synchronize street lights, and issue driver warnings, leading to faster and safer trips for drivers and pedestrians sharing the roadways. As these technologies are deployed, they become a bridge to the connected vehicles of tomorrow. The roadside data infrastructure gives connected cars a head start.

Connected cities will begin using machine learning (ML) to strategically deploy emergency response and proactive maintenance vehicles like tow trucks, snow plows, and more.

– Bryan Tantzen, Senior Director, Industry Products, Cisco

Botnets Make More Trouble

Millions of new connected consumer devices make a nice attack surface for hackers, who will continue to probe the connections between low-power, somewhat dumb devices and critical infrastructure.

The biggest security challenge I see is the creation of Distributed Destruction of Service (DDeOS) attacks that employ swarms of poorly-protected consumer devices to attack public infrastructure through massively coordinated misuse of communication channels.

IoT botnets can direct enormous swarms of connected sensors like thermostats or sprinkler controllers to cause damaging and unpredictable spikes in infrastructure use, leading to things like power surges, destructive water hammer attacks, or reduced availability of critical infrastructure on a city-wide or state-wide level.

– Shaun Cooley, VP and CTO, Cisco

Blockchain Adds Trust

Cities are uniquely complex connected systems that don’t work without one key shared resource: trust.

From governmental infrastructure to private resources, to financial networks, to residents and visitors, all of a city’s constituents have to trust, for example, that the roads are sound and that power systems and communication networks are reliable. Those working on city infrastructure itself can’t live up to this trust without knowing that they are getting accurate data. With the growth of IoT, the data from sensors, devices, people, and processes is getting increasingly decentralized—yet systems are more interdependent than ever.

As more cities adopt IoT technologies to become smart, and thus rely more heavily on digital transactions to operate, we see blockchain technology being used more broadly to put trust into data exchanges of all kinds. A decentralized data structure that monitors and verifies digital transactions, blockchain technology can ensure that each transaction, whether a bit of data streaming from distributed air quality sensors, a transaction passing between customs agencies at an international port, or a connection to remote digital voting equipment, is intact and verifiable.

– Anil Menon, SVP & Global President, Smart+Connected Communities, Cisco
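Real blockchains add replication and consensus among many independent parties, which is beyond a short example. The tamper-evidence idea behind "intact and verifiable" can be sketched with a simple hash chain: each record stores the hash of the previous one, so altering an earlier record breaks verification. The record fields and function names below are hypothetical.

```python
from __future__ import annotations

import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(payload: dict, prev_hash: str) -> str:
    """SHA-256 over a canonical JSON encoding of the payload plus the previous hash."""
    body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(chain: List[Dict], payload: dict) -> None:
    """Add a record that commits to the hash of the record before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"payload": payload, "prev": prev_hash, "hash": _digest(payload, prev_hash)})


def verify(chain: List[Dict]) -> bool:
    """Recompute every link; any edited payload or broken link makes this False."""
    prev_hash = GENESIS
    for block in chain:
        if block["prev"] != prev_hash or block["hash"] != _digest(block["payload"], prev_hash):
            return False
        prev_hash = block["hash"]
    return True


ledger: List[Dict] = []
append(ledger, {"sensor": "aq-12", "pm25": 8.4})
append(ledger, {"sensor": "aq-12", "pm25": 9.1})
print(verify(ledger))               # True
ledger[0]["payload"]["pm25"] = 3.0  # tamper with an earlier air-quality reading
print(verify(ledger))               # False
```

Someone able to rewrite the whole chain could recompute every hash; blockchains counter that by replicating the chain across independent parties and requiring them to agree on its contents.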

FogHorn Systems

Sastry Malladi, CTO of FogHorn Systems, has shared his top five predictions for the IIoT in 2018.

1. Momentum for edge analytics and edge intelligence in the IIoT will accelerate in 2018.

Almost every notable hardware vendor has a ruggedized line of products promoting edge processing. This indicates that the market is primed for Industrial IoT (IIoT) adoption. With technology giants announcing software stacks for the edge, there is little doubt that this momentum will only accelerate during 2018. Furthermore, traditional industries, like manufacturing, that have been struggling to showcase differentiated products will now embrace edge analytics to drive new revenue streams and/or significant yield improvements for their customers.
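Edge processing often starts with deciding locally which data is worth sending upstream at all, which matters most where bandwidth is scarce. One common technique is report-by-exception: the edge device evaluates every sample and forwards a value only when it has moved by more than a deadband since the last value sent. The sketch below is a minimal, hypothetical illustration of that idea, not FogHorn's software; the function name, deadband, and pressure readings are assumptions.

```python
from __future__ import annotations

from typing import Iterable, Iterator, Optional, Tuple


def report_by_exception(
    samples: Iterable[Tuple[float, float]], deadband: float
) -> Iterator[Tuple[float, float]]:
    """Yield (timestamp, value) only when value moves more than `deadband` from the last sent value.

    The first sample is always sent so the upstream system has a starting point.
    """
    last_sent: Optional[float] = None
    for ts, value in samples:
        if last_sent is None or abs(value - last_sent) > deadband:
            last_sent = value
            yield ts, value


# Hypothetical wellhead pressure readings (bar), one per second.
readings = list(enumerate([101.0, 101.2, 100.9, 101.1, 104.8, 105.0, 104.9, 99.5]))
sent = list(report_by_exception(readings, deadband=1.0))
print(f"sent {len(sent)} of {len(readings)} samples: {sent}")
```

The trade-off is that drifts smaller than the deadband are never reported, so in practice a rule like this would typically be paired with periodic heartbeat values or richer analytics running at the edge itself.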

Additionally, any industry with assets being digitized and making the leap toward connecting or instrumenting brownfield environments is well positioned to leverage the value of edge intelligence.

Usually, the goal of these initiatives is to have deep business impact. This can be delivered by tapping into previously unknown or unrealized efficiencies and optimizations. Often these surprising insights are uncovered only through analytics and machine learning. Industries with often limited access to bandwidth, such as oil and gas, mining, fleet and other verticals, truly benefit from edge intelligence.

2. Business cases and ROI are critical for IIoT pilots and adoption in 2018.

The year 2017 was about exploring IIoT and led to an explosion of proofs of concept and pilot implementations. While this trend will continue into 2018, we expect increased awareness about the business value edge technologies bring to the table. Companies that have been burned by the “Big Data Hype” – where data was collected but little was leveraged – will assess IIoT engagements and deployments for definitive ROI. As edge technologies pick up speed in proving business value, the adoption rate will rise exponentially to meet the demands of ever-increasing IoT applications.

3. IIoT standards will be driven by customer successes and company partnerships.

4. IT and OT teams will collaborate for successful IIoT deployments

IIoT deployments will start forcing closer engagement between IT and operations technology (OT) teams. Line-of-business leaders will get more serious about investing in digitization, and IT will become the cornerstone required for the success of these initiatives. What was considered a wide gap between the two sectors – IT and OT – will be bridged, thanks to the recognized collaboration needed to successfully deploy IIoT solutions and initiatives.

5. Edge computing will reduce security vulnerabilities for IIoT assets.

While industries do recognize the impact of an IIoT security breach, there is surprisingly little implementation of specific solutions. This stems from two emerging trends:

a) Traditional IT security vendors are still repositioning their existing products to address IIoT security concerns.

b) A number of new entrants are developing targeted security solutions that are specific to a layer in the stack, or a particular vertical.

This creates the expectation that, if and when an event occurs, these two classes of security solutions will be sufficient. Often IoT deployments are considered greenfield and emerging, so these security breaches still seem very futuristic, even though they are happening now. Consequently, there is little urgency to deploy security solutions, and most leaders seem to take a wait-and-watch approach. The good news is that major security threats, like WannaCry, Petya/Goldeneye and BadRabbit, do resurface IIoT security concerns during the regular news cycle. However, until security solutions are more targeted and evoke trust, they may not help move the needle.