by Gary Mintchell | Nov 2, 2017 | Internet of Things, Manufacturing IT, Operations Management, Operator Interface, Software, Technology

Gathering data, visualization on many devices and screens, and connecting with standards including OPC UA and BACnet attracted a crowd of developers and users to the Iconics World Wide Customer Conference this week in Providence, RI.

“Connected Intelligence is our theme at this year’s summit and it has a dual meaning for us,” said Russ Agrusa, President and CEO of Iconics. “First, it refers to our extensive suite of automation software itself and how it provides out-of-the-box solutions for visualization, mobility, historical data collection, analytics and IIoT. The second point is that Iconics, over the last 30 years, has built a community of partners and customers who will have the opportunity to meet our software designers and other employees and have one-on-one discussions on such topics as Industry 4.0, IIoT, cloud computing, artificial intelligence (AI) and the latest advances in automation software technology. It is truly a high-energy and exciting event.”

Key technologies showcased at the Iconics Connected Intelligence Customer Summit included:

1. Industry 4.0 and the Industrial Internet of Things

2. Unlocking data and making the invisible, visible

3. Secure strategies and practices for industrial, manufacturing and building automation

4. Predictive AnalytiX using expert systems such as FDD and AI Machine Learning

5. Hot, warm and cold data storage with plant historians for the cloud and IIoT

Integration With AR, VR, and Mixed Reality Tech

The recent v10.95 release of the GENESIS64 HMI/SCADA and building automation suite includes a 3D holographic machine interface (HMI), which can be used with Microsoft’s HoloLens self-contained holographic computing device. This combination of Iconics software with Microsoft hardware allows users to visualize real-time data and analytics KPIs in both 2D and 3D holograms. When combined with Iconics AnalytiX software, users can take advantage of additional fault detection and diagnostics (FDD) and Hyper Historian data historian benefits, providing needed “on the spot” information in a hands-free manner.

“These new hands-free and mixed reality devices enable our customers and partners to ‘make the invisible visible’,” said Russ Agrusa, President and CEO of Iconics. “There is a massive amount of information and value in all that collected and real-time data. Data is the new currency and we make it very easy to uncover this untapped information. We welcome this year’s summit attendees to get a glimpse at the future of HMI wearable devices such as Microsoft’s HoloLens and RealWear HMT-1, and HP and Lenovo virtual reality devices.”

Mobile head-mounted, tablet-style device

The v10.95 release of the GENESIS64 HMI/SCADA and building automation suite includes Any Glass technology, which can be used with self-contained head-wearable computing devices. The HMT-1 from RealWear demonstrated the visualization of real-time and historical data KPIs with voice-driven, hands-free usage.

Featuring an intuitive, completely hands-free interface, the RealWear HMT-1 is a rugged head-worn solution that brings industrial IoT data visualization, remote video collaboration, technical documentation, assembly and maintenance instructions, and streamlined inspections right to the eyes and ears of workers in harsh and loud field and manufacturing environments.

Support for multiple OSs and devices

Iconics has always been a Microsoft Windows application and will continue to be. However, the IoTWorX Industrial Internet of Things (IIoT) software automation suite includes support for multiple operating systems, including Windows 10 IoT Enterprise and Windows 10 IoT Core, as well as a large variety of embedded Linux operating systems, including Ubuntu and Raspbian.

Users can connect to virtually any automation equipment through supported industry protocols such as BACnet, SNMP, Modbus, OPC UA, and classic OPC Tunneling. Iconics’ IoT solution takes advantage of Microsoft Azure cloud services to provide global visibility, scalability, and reliability. Optional Microsoft Azure services such as Power BI and Machine Learning can also be integrated to provide greater depth of analysis.

The following operating systems are currently being certified for IoTWorX:

• Windows 10 IoT Enterprise

• Windows 10 IoT Core

• Red Hat Enterprise Linux 7

• Ubuntu 17.04, Ubuntu 16.04, Ubuntu 14.04

• Linux Mint 18, Linux Mint 17

• CentOS 7

• Oracle Linux 7

• Fedora 25, Fedora 26

• Debian 8.7 or later versions, openSUSE 42.2 or later versions

• SUSE Enterprise Linux (SLES) 12 SP2 or later versions

Hot, Warm, Cold Data Storage

Hyper Historian data historian integrates with and supports Microsoft Azure Data Lake for more data storage, archiving and retrieval.

When real-time “hot” data is collected at the edge by IoT devices and other remote collectors, it can then be securely transmitted to “warm” data historians for mid-term archiving and replay. Hyper Historian now features the ability to archive to “cold” long-term data storage systems such as data lakes, Hadoop or Azure HDInsight. These innovations help to make the best use of historical data at any stage in the process for further analysis and for use with machine learning.
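The hot, warm and cold split can be pictured as a simple age-based rule. The following is only a minimal sketch of that idea, not how Hyper Historian decides where data lives: the function name `storage_tier` and both retention windows are assumptions made up for illustration.

```python
from datetime import datetime, timedelta

# Hypothetical retention windows (assumptions, not Iconics defaults).
HOT_WINDOW = timedelta(hours=24)
WARM_WINDOW = timedelta(days=90)


def storage_tier(sample_time: datetime, now: datetime) -> str:
    """Pick a storage tier for a sample based only on its age."""
    age = now - sample_time
    if age <= HOT_WINDOW:
        return "hot"    # still at the edge, available in real time
    if age <= WARM_WINDOW:
        return "warm"   # plant historian, mid-term archive and replay
    return "cold"       # long-term store such as a data lake


now = datetime(2017, 11, 2, 12, 0)
for label, ts in [
    ("one hour old", now - timedelta(hours=1)),
    ("one week old", now - timedelta(days=7)),
    ("one year old", now - timedelta(days=365)),
]:
    print(f"{label}: {storage_tier(ts, now)}")
```

In practice the boundaries would be set by site policy, storage cost and how often the data is queried, rather than by fixed constants.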

Analytics

Among the new analytical features are a new 64-bit BridgeWorX64 data bridging tool, a new 64-bit ReportWorX64 reporting tool, several new Energy AnalytiX asset performance charts and usability improvements. In addition, Iconics has introduced a new BI Server.

• AnalytiX-BI – Provides data aggregation using data modeling and data sets

• ReportWorX64 – Flexible, interactive, drag & drop, drill-down reporting dashboards

• BridgeWorX64 – Data bridging with drag-and-drop workflows that can be scheduled

• Smart Energy AnalytiX – a SaaS based energy and facility solution for buildings

• Smart Alarm AnalytiX – a SaaS based alarming analysis product that uses EEMUA

by Gary Mintchell | Feb 1, 2017 | Automation, Manufacturing IT, Operations Management, Operator Interface

One of my customers back in the 90s established an OEE office and placed an OEE engineer in each plant. OEE, of course, is the popular abbreviation for Overall Equipment Effectiveness, a product of ratios that places a numerical value on “true” productivity. I’ve always harbored some reservations about OEE, especially as a comparative metric, because of the inherent variability of inputs. Automated data collection and modern database analytics are a solution.

A press release and email conversation with Parsec came my way this week. It sets the stage by pointing to the pressure to increase quality and quantity, while reducing costs, leading manufacturers to seek a deeper understanding of trends and patterns and new ways to drive efficiency. The very nature of OEE is to identify the percentage of manufacturing time that is truly productive. It is the key metric for measuring the performance of an operation, but many companies measure it incorrectly, or don’t measure it at all.

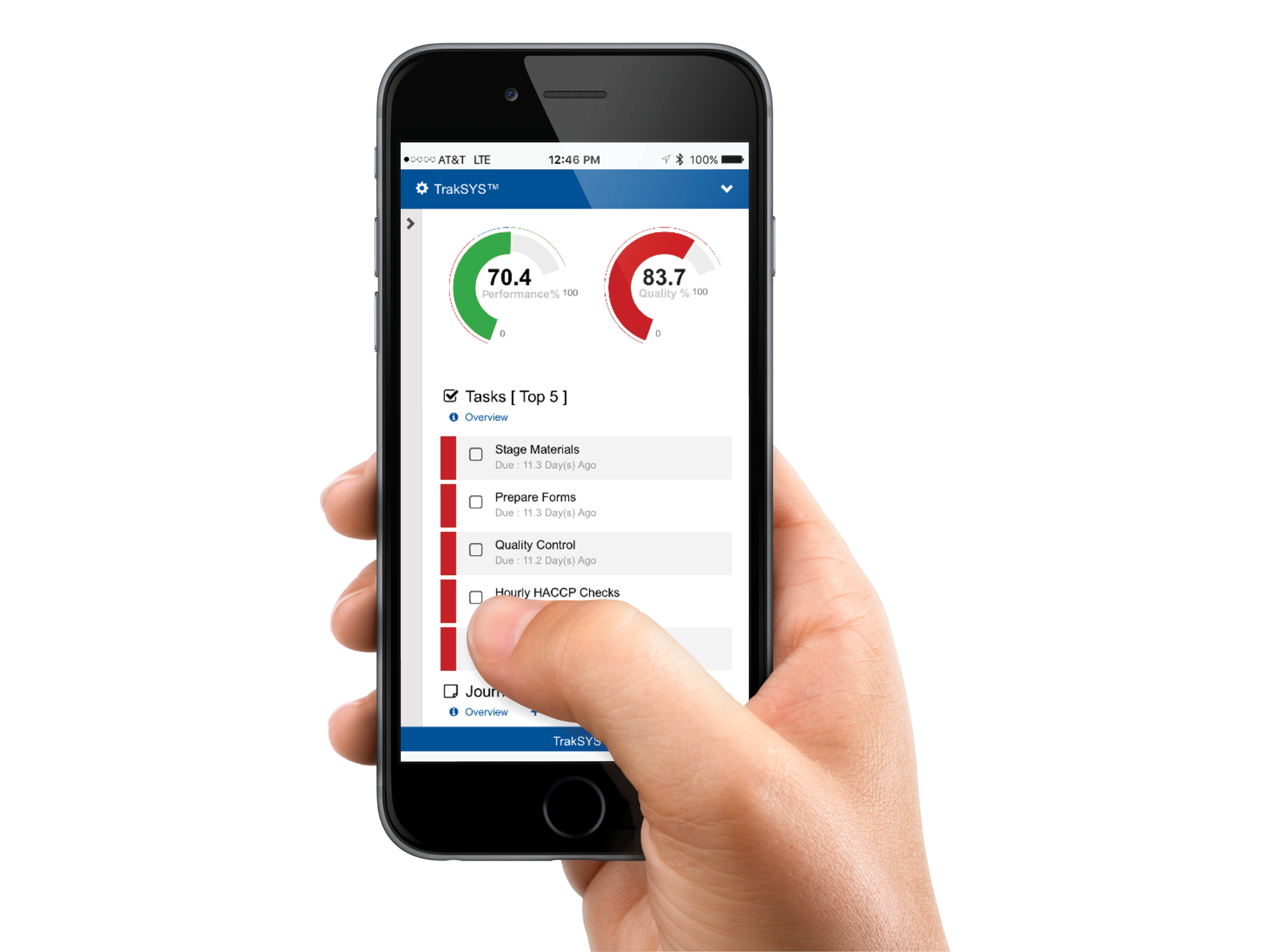

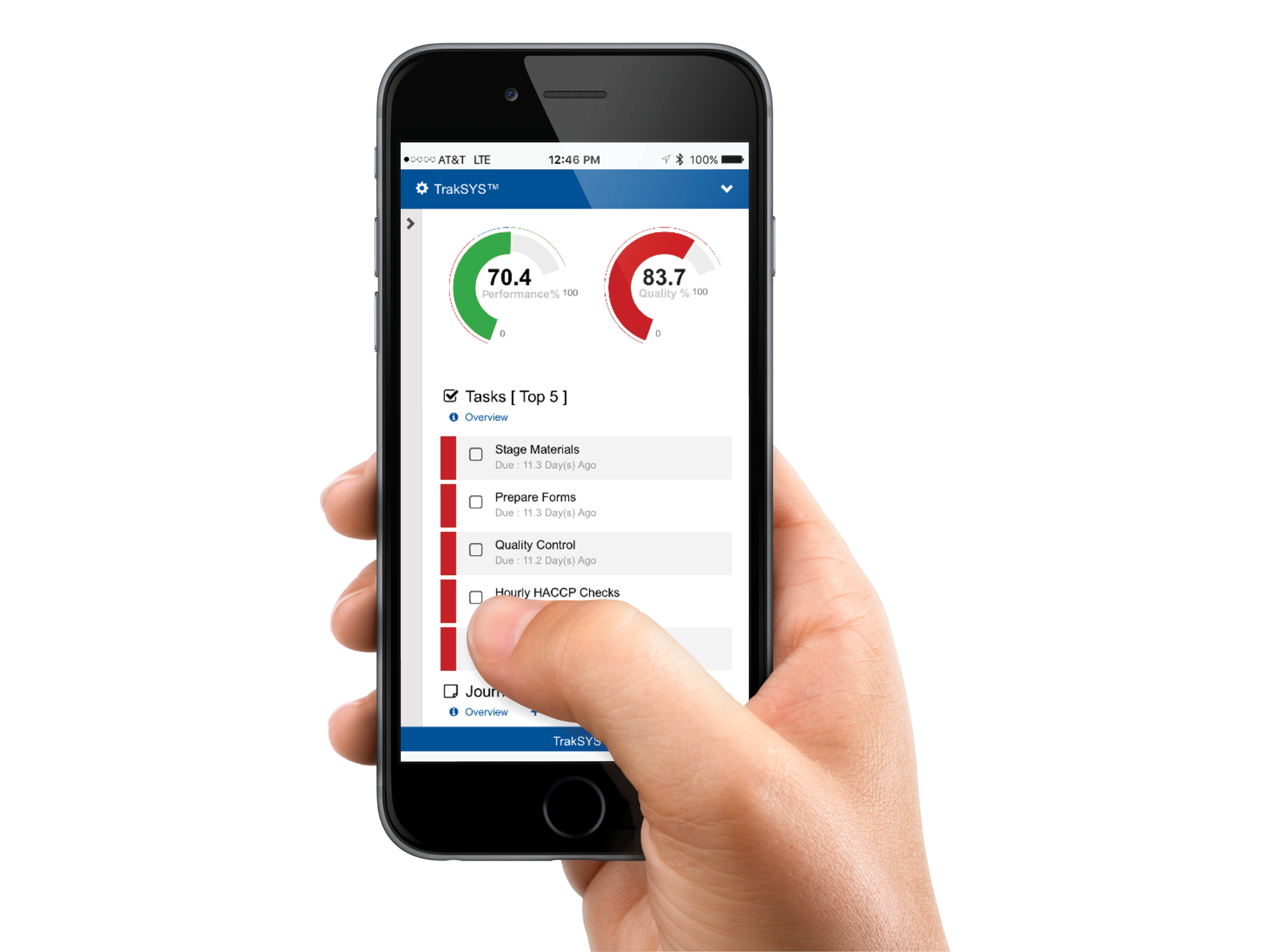

In the latest example of its efforts to help manufacturers maximize performance while reducing costs and complexity, Parsec launched its real-time Overall Equipment Effectiveness (OEE) Performance Management solution.

Most OEE measurement systems capture data from a single source and offer reports that may be visually appealing but actually contain very little substance. Other OEE systems capture lots of data but fail to give operators the necessary tools to act on that data. The TrakSYS OEE Performance Management solution collects and aggregates data from multiple sources, leveraging existing assets, resources and infrastructure, and provides insight into areas of the operation that need improvement with the tools to take action.

“We are challenging manufacturers to go beyond OEE measurement and to begin thinking about performance management,” said Gregory Newman, Parsec vice president of marketing. “Our TrakSYS OEE Performance Management solution pinpoints the root causes of poor performance and closes the loop by providing actionable intelligence and the tools necessary to fix the bottlenecks and improve productivity.”

The Power to Perform

When designing the TrakSYS OEE Performance Management solution, Parsec took into account three key criteria for measuring OEE: Availability, Performance and Quality. Availability, or downtime loss, encompasses changeovers, sanitation/cleaning, breakdowns, startup/shutdown, facility problems, etc. Performance, or speed loss, includes running a production system at a speed lower than the theoretical run rate, and short stop failures such as jams and overloads. Quality, or defect loss, is defined as production and startup rejects, process defects, reduction in yield, and products that need to be reworked to conform to quality standards. As part of the solution, Parsec created a variety of standard dashboards and reports as well as the ability to customize reports through powerful web-based configuration tools.
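For readers who have not worked the numbers, the standard textbook calculation multiplies the three ratios together. The sketch below is that generic formula, not Parsec's implementation; the `ShiftData` fields and the sample shift figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ShiftData:
    planned_minutes: float     # scheduled production time
    downtime_minutes: float    # availability (downtime) loss
    ideal_rate_per_min: float  # theoretical run rate, units per minute
    total_units: int           # everything produced, good or bad
    rejected_units: int        # quality (defect) loss, including rework


def oee(shift: ShiftData) -> dict:
    """Return the three OEE ratios and their product."""
    run_minutes = shift.planned_minutes - shift.downtime_minutes
    availability = run_minutes / shift.planned_minutes
    performance = shift.total_units / (run_minutes * shift.ideal_rate_per_min)
    quality = (shift.total_units - shift.rejected_units) / shift.total_units
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


# Made-up shift: 480 planned minutes, 60 minutes down, 10 units/min ideal rate.
shift = ShiftData(planned_minutes=480, downtime_minutes=60,
                  ideal_rate_per_min=10, total_units=3780, rejected_units=189)
result = oee(shift)
for name, value in result.items():
    print(f"{name}: {value:.1%}")
```

With these made-up inputs, availability is 87.5%, performance 90.0% and quality 95.0%, for an OEE of about 74.8%. Three respectable-looking ratios still multiply out to a quarter of the shift lost, which is why the breakdown matters more than the headline number.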

“Our goal is to empower manufacturers to unlock unseen potential with their existing infrastructure,” added Newman. “Even small tweaks can save a plant millions of dollars each year.”

TrakSYS is an integrated platform that contains all of the functionality of a full manufacturing execution system (MES) in one package. The modular nature of TrakSYS brings flexibility to deploy only the functions that are required, without a major software upgrade. TrakSYS business solutions include OEE, SPC, e-records, maintenance, traceability, workflow, batch processing, sustainability, labor, and more.

www.parsec-corp.com

by Gary Mintchell | Sep 13, 2016 | Automation, News, Operator Interface, Technology

Rockwell Automation has announced the acquisition of Automation Control Products (ACP), a provider of centralized thin client, remote desktop and server management software. ACP’s two core products, ThinManager and Relevance, provide manufacturing and industry with visual display and software solutions to, as the Rockwell press release put it, “manage information and streamline workflows for a more connected manufacturing environment.”

I met Matt Crandell, ACP CEO, years ago at a trade show touting Linux, as I recall, to a Microsoft crowd. He and his team had developed a thin client technology (“dumb” terminals connected to a server) that brought 1970s and 80s era corporate computing into the modern age. He had good relations with Wonderware, but I’ve noticed an increasingly strong partnership with Rockwell Automation. This exit was probably the best he could hope for. Congratulations to Matt and the team for a good run and a good exit.

The press release gives us Rockwell’s justification, “This acquisition supports the Rockwell Automation growth strategy to help customers increase global competitiveness through The Connected Enterprise – a vision that connects information across the plant floor to the rest of the enterprise. It is accelerated by the Industrial Internet of Things and advances in technologies, such as data analytics, remote monitoring, and mobility.”

“Today’s plant engineers turn to our technology innovation and domain expertise to help improve their manufacturing quality and reliability while increasing productivity,” said Frank Kulaszewicz, senior vice president of Architecture and Software, Rockwell Automation. “With ACP’s industry-leading products now in our portfolio, we can provide new capabilities for workers as the manufacturing environment becomes more digital and connected.”

ThinManager centralizes the management and visualization of content to every facet of a modern manufacturing operation, from the control room to the end user. It streamlines workflows and allows users to reduce hardware operation and maintenance costs. Relevance extends the ThinManager functionality through proprietary location-based technology, enabling users’ secure mobile access to content and applications from anywhere.

“We are a perfect addition to Rockwell Automation’s industrial automation offerings that aim to increase reliability, productivity and security as well as lower energy and maintenance costs while implementing sustainable technology for leading global manufacturers,” said Matt Crandell, CEO of ACP. “We are confident that our customers will quickly see the value from our two organizations working to address their needs together.”

by Gary Mintchell | Sep 9, 2016 | Automation, Operations Management, Operator Interface, Process Control

Plant operators have been isolated in remote control rooms for decades. They tend to lose intimate knowledge of their processes as they monitor computer screens in these isolated rooms. The sounds and smells are gone. Everything is theoretical.

This system has worked. But, is it the best, most efficient, most effective use of human intelligence?

Not likely. Technologies and work processes are joining to allow plant managers to change all this.

Tim Sowell, VP and Fellow at Schneider Electric, recently shared some more of his prescient thoughts on this issue–spurred as usual by conversations with customers.

He asks, “What is the reason why users have been locked to the desk/ control room, why has this transition not happened successfully before? It is simple, the requirement to be monitoring the plant. A traditional control room is the central place alarms, notifications were traditionally piped.”

Diving into what it takes to change, Sowell goes on to say, “The user needs to be empowered with situational plant awareness, freed from monitoring, shifting to the experience of exception based notification. As the user roams the plant, the user is still responsible, and aware, and able to make decisions across the plant he is responsible for even if he is not in view of a particular piece of equipment. Driving the requirement for the mobile device the user carries to allow notification, drill thru access to information and ability to collaborate so the dependency to sit in the control room has lifted.”

Two streams join to form a river. Sowell continues, “The second key part of the transformation/ enablement of an edge worker is that their work, tasks and associated materials can transfer with them. As the world moves to planned work, a user may start a work task on a PC in the control room, but now move out to execute close action. As the user goes through the different steps, the associated material and actions are at his fingertips. The operational work can be generated, assigned and redirected from all terminals and devices.”

The first generation of this thinking formed around the rapid development of mobile devices. Before plant managers and engineers could come to grips with one technology, the next popped up. Instead of careful and prolonged development by industrial technology providers, these devices came directly from consumers. Operators and maintenance techs and engineers brought them from home. Smart phones–the power of a computer tucked into their pockets.

Sowell acknowledges it takes more than a handheld computer. “It requires the transformation to task based integrated operational environment where the ‘edge worker’ is free to move.”

The information must free them to navigate with freedom, no matter the format, no matter where they start an activity, and have access to everything.
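The “exception based notification” Sowell describes can be reduced to a simple filter: say nothing about readings inside their limits, and route the rest to whoever is responsible for that equipment. Here is a minimal sketch of that idea only. The `Reading` fields, the tag names and the owners are all hypothetical, and a real system would layer alarm priorities, deadbands and acknowledgement on top.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    tag: str      # hypothetical tag name
    value: float
    low: float    # lower acceptable limit
    high: float   # upper acceptable limit
    owner: str    # worker responsible for this equipment


def exceptions_by_owner(readings):
    """Keep only out-of-limit readings, grouped by the responsible worker."""
    alerts = {}
    for r in readings:
        if not (r.low <= r.value <= r.high):
            alerts.setdefault(r.owner, []).append(r.tag)
    return alerts


readings = [
    Reading("P28.flow", 41.0, 30.0, 50.0, "alice"),
    Reading("P28.pressure", 9.7, 2.0, 8.0, "alice"),
    Reading("T12.level", 12.0, 20.0, 90.0, "bob"),
    Reading("T12.temp", 65.0, 40.0, 80.0, "bob"),
]
alerts = exceptions_by_owner(readings)
for owner, tags in alerts.items():
    print(f"notify {owner}: {', '.join(tags)}")
```

Only two of the four readings produce a message, and each lands with the person who owns that part of the plant wherever they happen to be standing.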

by Gary Mintchell | Mar 14, 2016 | Automation, Operator Interface

“Siri, what’s the weather in Bangor?”

“Alexa, buy some toilet paper.”

“Zelda, check the status of the control loop at P28.”

Operator interface is many years removed from its last significant upgrade. Yes, the Abnormal Situation Management Consortium (led by Honeywell) and Human-Centered Design used by Emerson Process Management and the work of the Center for Operator Performance have all worked on developing more readable and intuitive screens.

But, there is something more revolutionary on the horizon.

A big chunk of time last week on the Gillmor Gang, a technology-oriented video conversation, was devoted to conversational interfaces. Apple’s Siri has become quite popular. Amazon Echo (Alexa) has gained a large following.

Voice activation for operator interface

Many challenges lie ahead for conversation (or voice) interfaces. Obviously many smart people are working on the technology. This may be a great place for the next industrial automation startup. This or bots. But let’s just concentrate on voice right now.

Especially look at how the technologies of various devices are coming together.

I use the Apple ecosystem, but you could do this in Android.

Right now my MacBook Air, iPad, and iPhone are all interconnected. I shoot a photo on my iPhone and it appears in my Photos app on the other two. If I had an Apple Watch, then I could communicate through my iPhone verbally. It’s all intriguing.

I can hear all the objections, right now. OK, Luddites <grin>, I remember a customer in the early 90s who told me there would never be a wire (other than I/O) connected to a PLC in his plant. So much for predictions. We’re all wired, now.

What have you heard or seen? How close are we? I’ve done a little angel investing, but I don’t have enough money to fund this. But for a great idea…who knows?

Hey Google, take a video.

by Gary Mintchell | Jan 21, 2016 | Automation, Operator Interface, Technology

Apps are so last year. Now the topic of the future appears to be bots and conversational interfaces (Siri, etc.). Many automation and control suppliers have added apps for smart phones. I have a bunch loaded on my iPhone. How many do you have? Do you use them? What if there were another interface?

I’ve run across two articles lately that deal with a coming new interface. Check them out and let me know what you think about these in the context of the next HMI/automation/control/MES generations.

Sam Lessin wrote a good overview at The Information (that is a subscription Website, but as a subscriber I can unlock some articles) “On Bots, Conversational Apps, and Fin.”

Lessin looks at the history of personal computing from shrink wrapped applications to the Web to apps to bots. Another way to look at it is client side to server side to client side and now back to server side. Server side is easier for developers and removes some power from vertical companies.

Lessin also notes a certain “app fatigue” where we have loaded up on apps on our phones only to discover we use only a fraction of them.

I spotted this on Medium–a new “blogging” platform for non-serious bloggers.

It was written by Ryan Block–former editor-in-chief of Engadget, founder of gdgt (both of which sold to AOL), and now a serial entrepreneur.

He looks at human/computer interfaces, “People who’ve been around technology a while have a tendency to think of human-computer interfaces as phases in some kind of a Jobsian linear evolution, starting with encoded punch cards, evolving into command lines, then graphical interfaces, and eventually touch.”

Continuing, “Well, the first step is to stop thinking of human computer interaction as a linear progression. A better metaphor might be to think of interfaces as existing on a scale, ranging from visible to invisible.”

Examples of visible interfaces would include the punchcard, many command line interfaces, and quite a bit of very useful, but ultimately shoddy, pieces of software.

Completely invisible interfaces, on the other hand, would be characterized by frictionless, low cognitive load usage with little to no (apparent) training necessary. Invisibility doesn’t necessarily mean that you can’t physically see the interface (although some invisible interfaces may actually be invisible); instead, think of it as a measure of how fast and how much you can forget that the tool is there at all, even while you’re using it.

Examples of interfaces that approach invisibility include many forms of messaging, the Amazon Echo, the proximity-sensing / auto-locking doors on the Tesla Model S, and especially the ship computer in Star Trek (the voice interface, that is, not the LCARS GUI, which is a highly visible interface. Ahem!).

Conversation-driven product design is still nascent, but messaging-driven products still represent massive growth and opportunity, expected to grow by another billion users in the next two years alone.

For the next generation, Snapchat is the interface for communicating with friends visually, iMessage and Messenger are the interfaces for communicating with friends textually, and Slack is (or soon will be) the interface for communicating with colleagues about work. And that’s to say nothing of the nearly two billion users currently on WhatsApp, WeChat, and Line.

As we move to increasingly invisible interfaces, I believe we’ll see a new class of messaging-centric platforms emerge alongside existing platforms in mobile, cloud, etc.

As with every platform and interface paradigm, messaging has its own unique set of capabilities, limitations, and opportunities. That’s where bots come in. In the context of a conversation, bots are the primary mode for manifesting a machine interface.

Organizations will soon discover — yet again — that teams want to work the way they live, and we all live in messaging. Workflows will be retooled from the bottom-up to optimize around real-time, channel based, searchable, conversational interfaces.

Humans will always be the entities we desire talking to and collaborating with. But in the not too distant future, bots will be how things actually get done.
