by Gary Mintchell | Apr 6, 2015 | Automation, Interoperability, Manufacturing IT, Networking, Operations Management, Technology

There are many new and cool open source projects going on right now. These are good opportunities for those of you who program to get involved. Or…you could take a hint and turn your passion into an open source project.

I’ve written three articles since November on the subject:

• Open Source Tools Development

• Open Source SCADA

• Open Source OPC UA for manufacturing

Sten Gruener wrote about yet another open source OPC UA project, open62541. This one seems to be centered in Europe (but everything on the Web is global, right?). It is a free, open source C (C99) implementation of the OPC UA communication stack, licensed under the LGPL with a static linking exception. A brief description:

Open

• stack design based solely on IEC 62541

• licensed under open source (LGPL & static linking exception)

• royalty free, available on GitHub

Scalable

• single or multi-threaded architecture

• one thread per connection/session

Maintainable

• 85% of code generated from XML specification files

Portable

• written in C99 with POSIX support

• compiled server is smaller than 100kb

• runs on Windows (x86, x64), Linux (x86, x64, ARM e.g. Raspberry Pi, SPARCstation), QNX and Android

Extensible

• dynamically loadable and reconfigurable user models

Background Information

OPC UA (short for OPC Unified Architecture) is a communication protocol originally developed in the context of industrial automation.

OPC UA has been released as an “open” standard (meaning everybody can buy the document) in the IEC 62541 series. As of late, it is marketed as the one standard for non-realtime industrial communication.

Remote clients can interact with a server by calling remote services. (These services are distinct from the remote procedure calls provided through the “Call” service.) The server contains a rich information model that defines an object system on top of an ontology-like set of nodes and references between nodes. The data and its “meta model” can be inspected to discover variables, objects, object types, methods, data types, and so on. Roughly, the services provide access to:

- Session management

- CRUD operations on the node level

- Remote procedure calls to methods defined in the address space

- Subscriptions to events and variable changes where clients are notified via push messages.
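The node-and-reference model described above can be pictured as a small graph. The following is a minimal Python sketch, not open62541's API: the class names, the reduced set of node classes and the example NodeIds (other than the standard Objects folder, i=85) are assumptions made up for illustration. It shows node-level create and delete plus a browse over forward references, which roughly mirror the CRUD and browsing services listed above.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class NodeClass(Enum):
    # A few of the OPC UA node classes, for illustration only
    OBJECT = "Object"
    VARIABLE = "Variable"
    METHOD = "Method"
    OBJECT_TYPE = "ObjectType"


@dataclass
class Reference:
    reference_type: str   # e.g. "Organizes", "HasComponent"
    target_id: str        # NodeId of the node at the other end
    is_forward: bool = True


@dataclass
class Node:
    node_id: str
    node_class: NodeClass
    browse_name: str
    value: object = None  # only meaningful for Variable nodes in this sketch
    references: list[Reference] = field(default_factory=list)


class AddressSpace:
    """Toy in-memory address space: nodes linked by typed references."""

    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}

    def add_node(self, node: Node, parent_id: str | None = None,
                 reference_type: str = "Organizes") -> None:
        """Create a node and, optionally, link it to a parent in both directions."""
        self.nodes[node.node_id] = node
        if parent_id is not None:
            self.nodes[parent_id].references.append(
                Reference(reference_type, node.node_id, is_forward=True))
            node.references.append(
                Reference(reference_type, parent_id, is_forward=False))

    def delete_node(self, node_id: str) -> None:
        """Remove a node and every reference that points at it."""
        del self.nodes[node_id]
        for other in self.nodes.values():
            other.references = [r for r in other.references
                                if r.target_id != node_id]

    def browse(self, node_id: str) -> list[str]:
        """Browse names of the nodes reached by forward references."""
        return [self.nodes[r.target_id].browse_name
                for r in self.nodes[node_id].references if r.is_forward]


space = AddressSpace()
space.add_node(Node("i=85", NodeClass.OBJECT, "Objects"))  # standard Objects folder
space.add_node(Node("ns=1;s=Pump1", NodeClass.OBJECT, "Pump1"), "i=85")
space.add_node(Node("ns=1;s=Pump1.Speed", NodeClass.VARIABLE, "Speed", 1450.0),
               "ns=1;s=Pump1", "HasComponent")
print(space.browse("ns=1;s=Pump1"))  # ['Speed']
```

Storing each reference in both directions is a simplification that makes browsing up and down the hierarchy cheap; a real server distinguishes many more reference types and node attributes.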

The data structures that the services take as input and produce as output can be encoded either as a binary stream or in XML. They are transported via a custom TCP-based protocol or via web services. Currently, open62541 supports only the binary encoding and the TCP-based transport.
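To give a feel for what the binary encoding over TCP looks like on the wire, here is a minimal Python sketch of the Hello ("HEL") message a client sends first on a new OPC UA TCP connection. The field layout follows my reading of the UA Connection Protocol in IEC 62541-6 and should be checked against the specification; the buffer sizes and endpoint URL are illustrative assumptions, and this is not open62541's code.

```python
from __future__ import annotations

import struct


def encode_hello(endpoint_url: str,
                 receive_buffer_size: int = 65536,
                 send_buffer_size: int = 65536,
                 max_message_size: int = 0,
                 max_chunk_count: int = 0) -> bytes:
    """Build an OPC UA TCP Hello message; all integers are little-endian."""
    url = endpoint_url.encode("utf-8")
    # Body: ProtocolVersion, ReceiveBufferSize, SendBufferSize,
    # MaxMessageSize, MaxChunkCount (UInt32 each; 0 is used for "no limit"
    # on the last two), then EndpointUrl as a UA String: Int32 byte length
    # followed by the UTF-8 bytes.
    body = struct.pack("<5I", 0, receive_buffer_size, send_buffer_size,
                       max_message_size, max_chunk_count)
    body += struct.pack("<i", len(url)) + url
    # Header: 3-byte message type, 1-byte chunk type ('F' = final chunk),
    # then the UInt32 size of the whole message including this 8-byte header.
    header = b"HEL" + b"F" + struct.pack("<I", 8 + len(body))
    return header + body


def decode_header(message: bytes) -> tuple[str, str, int]:
    """Split the 8-byte header into message type, chunk type and size."""
    msg_type, chunk_type, size = struct.unpack_from("<3scI", message)
    return msg_type.decode("ascii"), chunk_type.decode("ascii"), size


hello = encode_hello("opc.tcp://localhost:4840")
print(decode_header(hello), len(hello))
```

Writing these bytes to a server's port (4840 is the registered OPC UA port) should prompt a compliant server to answer with an Acknowledge ("ACK") message before any secure channel or session is opened.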

by Gary Mintchell | Mar 6, 2015 | Automation, Data Management, Operations Management, Technology

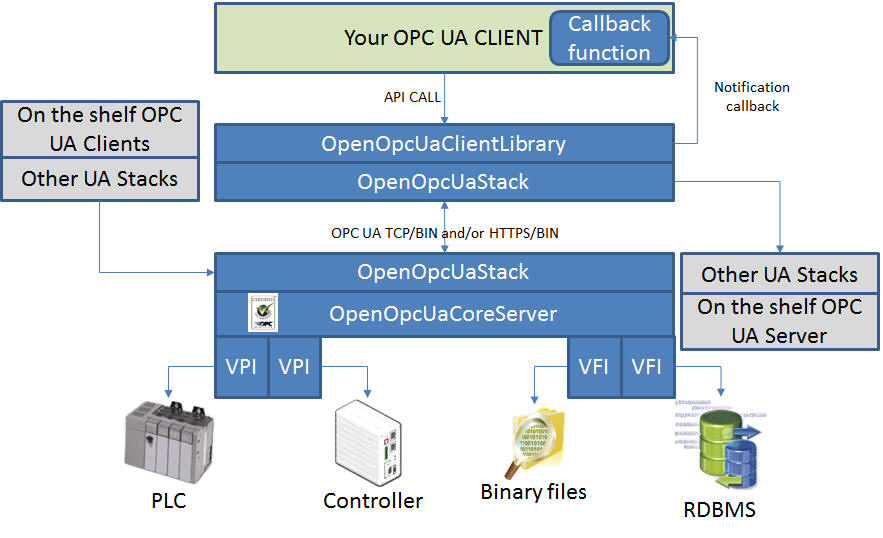

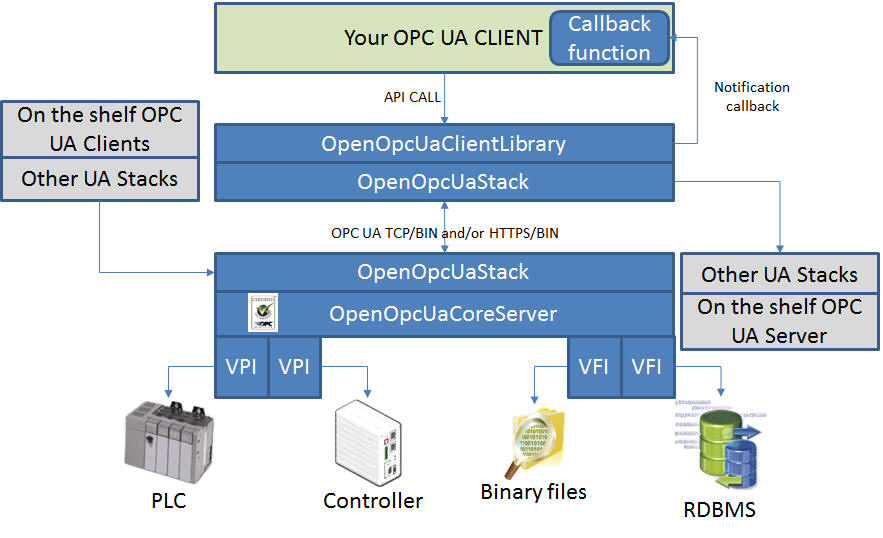

Here is a manufacturing open-source project that somehow slipped under my radar. Perhaps that is because it began in Europe? It’s an open-source OPC UA platform: OpenOpcUa.


This looks interesting. It also looks like it is growing legs.

Here is its description–I love the first line:

First remember that Open is different than Free.

OpenOpcUa is an initiative launched in 2009 by a consortium of international companies led by Michel Condemine (4CE Industry). The objectives of this consortium are multiple:

- Create a professional-quality codebase implementing the OpcUa concepts

- Help users understand OpcUa technology

Today OpenOpcUaCoreServer is the only Open Source OPC UA server certified by the OPC Foundation Compliance Test Tool.

And here is further description:

Open Source C/C++ codebase for OPC UA product development.

OpenOpcUa is an Open Source codebase (CeCILL-C licensed, with no fork option) that makes OPC UA development easy. It is available on Windows, Windows CE, Linux and VxWorks. With the OpenOpcUa codebase you can create a client and/or a server. Because open does not mean free, you have to pay a one-time fee to access the OpenOpcUa codebase.

- The same codebase for all platforms

- A server ready to use

- Extension using a toolset for driver development

- Powerful API for client development

- Supports existing and future UA information models without recompilation

- UA information models loaded dynamically from XML files conforming to the standardized UANodeSet.xsd

- A common project supported by worldwide companies

- OpenOpcUa codebase is compliant with the OPC Foundation Compliance Test Tool (CTT)
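To illustrate what “loaded dynamically from XML” can mean in practice, here is a minimal Python sketch that reads object and variable nodes from a UANodeSet document at runtime using only the standard library. It is not OpenOpcUa's loader (which is C/C++); the sample NodeIds and names are made up, and a real loader would also resolve Aliases, namespace URIs and the other node classes that UANodeSet.xsd defines.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

# Target namespace of the OPC Foundation UANodeSet schema
NS = {"ua": "http://opcfoundation.org/UA/2011/03/UANodeSet.xsd"}

# Hypothetical two-node information model, for illustration only
NODESET_XML = """<UANodeSet xmlns="http://opcfoundation.org/UA/2011/03/UANodeSet.xsd">
  <UAObject NodeId="ns=1;i=1000" BrowseName="1:Pump">
    <DisplayName>Pump</DisplayName>
    <References>
      <Reference ReferenceType="Organizes" IsForward="false">i=85</Reference>
    </References>
  </UAObject>
  <UAVariable NodeId="ns=1;i=1001" BrowseName="1:Speed" DataType="Double">
    <DisplayName>Speed</DisplayName>
    <References>
      <Reference ReferenceType="HasComponent" IsForward="false">ns=1;i=1000</Reference>
    </References>
  </UAVariable>
</UANodeSet>"""


def load_nodes(xml_text: str) -> dict[str, dict]:
    """Parse a UANodeSet document into a dict keyed by NodeId."""
    root = ET.fromstring(xml_text)
    nodes: dict[str, dict] = {}
    for tag in ("UAObject", "UAVariable", "UAMethod", "UAObjectType"):
        for elem in root.findall(f"ua:{tag}", NS):
            # Each reference: (type, target NodeId, direction); IsForward defaults to true
            refs = [(r.get("ReferenceType"), (r.text or "").strip(),
                     r.get("IsForward", "true") == "true")
                    for r in elem.findall("ua:References/ua:Reference", NS)]
            nodes[elem.get("NodeId")] = {
                "node_class": tag,
                "browse_name": elem.get("BrowseName"),
                "display_name": elem.findtext("ua:DisplayName", default="",
                                              namespaces=NS),
                "references": refs,
            }
    return nodes


for node_id, info in load_nodes(NODESET_XML).items():
    print(node_id, info["node_class"], info["browse_name"])
```

Because the model lives in a data file rather than in compiled code, swapping in a different information model only means pointing the loader at a different XML file, which is the point of the “without recompilation” claim above.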

If you have further information about this project, please let me know.

by Gary Mintchell | Feb 27, 2015 | Operations Management, Software, Standards

Looks like there is a debate in the software development community again. This time it is around Node.js.

Dave Winer is a pioneer in software development. I used his first blogging platform, Radio UserLand, from 2003 until about 2009, when it closed and I moved first to SquareSpace and then to WordPress. Below I point to a discussion about whether the Node.js community needs a foundation.

His points apply to manufacturing software development, too. Groups of engineers gather to solve a problem. The problem usually involves opening up to some level of interoperability.

This is a double-edged sword for major suppliers. They’d prefer customers buy all their solutions from them. And, yes, if you control all the technology, you can make communications more solid and faster. However, no supplier supplies all the components a customer wants, so some form of interoperability is required.

That is why we have technologies such as OPC, HART, CIP, and the like. Each of these solved a problem and advanced the industry.

Today engineers are working on still more interoperability standards. If these succeed, owner/operators will be able to move data seamlessly, or almost seamlessly, from application to application, solving many business problems.

Doing this, however, threatens the lucrative market of high-end consultants, for whom the lock-in of writing and maintaining custom code is a billion-dollar business. Hence their efforts to prevent the adoption of standards.

Winer nails all this.

I am new to Node but I also have a lot of experience with the dynamics [Eran] Hammer is talking about, in my work with RSS, XML-RPC and SOAP. What he says is right. When you get big companies in the loop, the motives change from what they were when it was just a bunch of ambitious engineers trying to build an open underpinning for the software they’re working on. All of a sudden their strategies start determining which way the standard goes. That often means obfuscating simple technology, because if it’s really simple, they won’t be able to sell expensive consulting contracts.

He was right to single out IBM. That’s their main business. RSS hurt their publishing business because it turned something incomprehensible into something trivial to understand. Who needs to pay $500K per year for a consulting contract to advise them on such transparent technology? They lost business.

IBM, Sun and Microsoft, through the W3C, made SOAP utterly incomprehensible. Why? I assume because they wanted to be able to claim standards-compliance without having to deal with all that messy interop.

As I see it Node was born out of a very simple idea. Here’s this great JavaScript interpreter. Wouldn’t it be great to write server apps in it, in addition to code that runs in the browser? After that, a few libraries came along, that factored out things everyone had to do, almost like device drivers in a way. The filesystem, sending and receiving HTTP requests. Parsing various standard content types. Somehow there didn’t end up being eight different versions of the core functionality. That’s where the greatness of Node comes from. We may look back on this having been the golden age of Node.

by Gary Mintchell | Dec 5, 2014 | Data Management, Manufacturing IT, Operations Management, Software

Schneider Electric has released Wonderware SmartGlance 2014 R2 mobile reporting manufacturing software. The updated version includes a host of new features, including support for wearable technologies, a modern user interface for any browser, self-serve registration, support for multiple time zones for a global user base, and full import and export capabilities for even faster deployment.


“Plant personnel are now mobile so they require immediate access to real-time operations information via their smart phone, tablet or whatever mobile device they carry,” said Saadi Kermani, Wonderware SmartGlance product manager, Schneider Electric. “Wonderware SmartGlance 2014 R2 software delivers highly relevant information coming from industrial data sources to targeted plant workers in the form of personalized charts, reports and alerts. It provides them with the flexibility they need to view and instantly collaborate around real-time plant data on any device so they can make rapid, effective decisions.”

With a small install footprint and no additional hardware requirements, the Wonderware SmartGlance implementation process is fast and simple. MyAlerts, the software’s newest mobile app feature, proactively notifies users of process events so they can stay current with real-time information based on configurable thresholds for tag reports. The software can be used with smart watches to alert mobile and remote field workers, plant supervisors and managers of critical production and process information in a real-time, hands-free manner.
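The announcement does not say how MyAlerts evaluates its thresholds, so the following is only a hypothetical Python sketch of the general idea of threshold-based alerting on tag values. The rule format, the `check_alerts` function, the tag names and the message text are all invented for illustration and are not the SmartGlance API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ThresholdRule:
    # Hypothetical rule: alert when a tag's value leaves the [low, high] band
    tag: str
    low: float | None = None
    high: float | None = None


def check_alerts(rules: list[ThresholdRule],
                 readings: dict[str, float]) -> list[str]:
    """Return one alert message for every reading outside its configured limits."""
    alerts = []
    for rule in rules:
        value = readings.get(rule.tag)
        if value is None:
            continue  # no fresh value for this tag in this scan
        if rule.high is not None and value > rule.high:
            alerts.append(f"{rule.tag} high: {value} > {rule.high}")
        elif rule.low is not None and value < rule.low:
            alerts.append(f"{rule.tag} low: {value} < {rule.low}")
    return alerts


rules = [ThresholdRule("Boiler.Temp", high=95.0),
         ThresholdRule("Tank.Level", low=10.0, high=90.0)]
print(check_alerts(rules, {"Boiler.Temp": 97.5, "Tank.Level": 50.0}))
```

In a real deployment the alert strings would be pushed to a phone or watch rather than printed; keeping the rules as data is what makes the thresholds configurable per user without code changes.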

By leveraging the combined power of mobility and Schneider Electric’s cloud-hosted managed services, the software empowers mobile and remote users with the right information at the right time, without disruption or distraction, so they can quickly assign resources and resolve issues. It also features an open interface to connect and push data to mobile devices from virtually any data source, including historians, manufacturing execution systems, enterprise manufacturing intelligence systems or any real-time system of record. It also provides connectors for accessing data from any SQL database and any OPC-HDA-compatible system for better access to third-party data sources and systems. This most recent version also extends connectivity to key Schneider Electric software products, including its Vijeo Citect SCADA offering, InStep PRiSM predictive asset analytics software and the InStep eDNA historian.

You might also check out my podcast interview with Kermani.

by Gary Mintchell | Nov 10, 2014 | Automation, Data Management, Manufacturing IT, News, Operations Management, Organizations, Standards, Technology

Collaboration works. Engineers and IT architects have been donating time to projects that stand to shorten the transition from building large, critical physical infrastructure assets to operating and maintaining them. The resulting system could benefit owner/operators of those assets to the tune of millions of dollars.


Much of the work has been under the radar, but much has also been accomplished. MIMOSA, the “Operations and Maintenance Information Open System Alliance,” a 501(c)(6) non-profit industry association, focuses on enabling industry solutions that leverage supplier-neutral, open standards. The methodology is to establish an interoperable industrial ecosystem for Commercial Off The Shelf (COTS) solution components provided by major industry suppliers.

As I wrote a few weeks ago, the most amazing thing about MIMOSA, the organization, and the Oil & Gas Interoperability Pilot (OGI Pilot) specifically, is the amount of progress they have made over the past few years. Some of the work has been ongoing for over a decade. Emphases have shifted over time, reflecting the needs of the moment and the readiness of technology.

The premise of the work is that major productivity gains in the design, build, operation and maintenance of critical physical infrastructure depend on transitioning to an interoperable, componentized architecture with shared, supplier-neutral industry information models and information and utility services.

Large enterprises are now spending 15 times their license fees or more on integration efforts. The standards-based interoperability model will dramatically reduce these direct costs while also improving quality, security and sustainability.

It all boils down to the core problem: a lack of interoperability among key people, processes and systems.

Here are just some of the accomplishments:

- Achieved a better strategic alignment with the PCA and Fiatech organizations

- Built a broader consensus around downstream system architecture demonstrated through OGI Pilot

- Beginning to expand from its downstream work to working with the Oil & Gas Standards Leadership Council on upstream solutions as well

The long-running OGI Pilot program builds out a test bed and pilots the ecosystem of data standards. The pilot provides continuous gap analysis, and it drove out the need for a standardized data sheet. The pilot started with a process flow diagram (PFD) and worked out the P&ID, including most of the schematic for the P&ID schemas, which are variations of ISO 15926. The next part of the project is to develop the data sheets that describe all the parts in detail. That’s the reason for the Industry Standard Data Sheet project (ISDD), which will pick up mechanical, electronic and thermodynamic information. Then the project returns to the PFD to pick up stream data.

Thus far, the project has focused on the CAPEX side of the system. Work is now returning to the OPEX (operations and maintenance) side.

This all returns focus to the OpenO&M Initiative, which aims to bring testable interoperability to the Business Object Document (BOD) architecture from OAGIS.

Everything is built upon real implementation specifications, including PRODML (Production Markup Language), B2MML (Business To Manufacturing Markup Language), MIMOSA CCOM-ML (Common Conceptual Object Model), ISBM (Information Service Bus Model), CIR (Common Interoperability Registry) and OPC UA.