by Gary Mintchell | Mar 24, 2016 | Automation, Internet of Things, Interoperability, Operations Management

Owner/Operators do see great benefits to standards for system-of-systems interoperability. Getting there is the problem.

Aaron Crews talked about what Emerson is doing along the lines I’ve been discussing. Tim Sowell of Schneider Electric Software hasn’t commented (that’s OK), but I’ve been looking at his blog posts and commenting for a while. He has been addressing some of these issues from another point of view. I’m interested in what the rest of the technology development community is working on.

Let’s take the idea of what various suppliers are working on and raise our point of view to that of an enterprise solutions architect. These professionals are concerned about what individual suppliers are doing, of course, but they also must make all of this work together for the common good.

Up until now, they have had to depend upon expensive integrators to piece all the parts together. So maybe they find custom ways to tie together Intergraph, SAP, Maximo, Emerson (just to pull a few suppliers out of the air) so that appropriate solutions can be devised for specific problems. And maybe they are still paying high rates for the integration while the integrator hires low-cost engineering from India or other countries.

When the owner/operators see their benefits and decide to act, then useful interoperability standards can be written, approved, and implemented in such a way as to benefit all parties.

Standards Driving Products

I think back to the early OMAC work of the late 90s. A few large end users wanted to drive down the cost of machine controllers, which they felt was higher than the value they were getting. They wanted to develop a specification for a generic, commodity PLC. No supplier was interested. (Are we surprised?) In the end the customers didn’t build a strong enough value proposition to bring about a new controller. (I was told behind the scenes that they did succeed in getting the major supplier to drop prices and everything else was forgotten.)

Another OMAC drive for an industry standard was PackML (Packaging Machine Language), a standard for packaging machines. This one came closer to working. It did not try to dictate the inside of the control; it merely provided an industry-standard way of interfacing with the machine. That part was successful. However, two problems ensued. A major consumer products company put it in the spec, but that did not guarantee that purchasing would open up bidding to other suppliers. Smaller control companies hoped that following the spec would level the playing field and allow them to compete against the majors. In the end, nothing much changed, except that the machine interface did become more standardized from machine to machine, greatly aiding training and workforce deployment for CPG manufacturers.
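To make "an industry standard way of interfacing with the machine" a little more concrete: PackML defines a common state model and a common set of commands, so every machine answers to the same vocabulary whatever controller sits inside it. The Python sketch below is a heavily simplified subset of that idea (transitional states such as Resetting and Starting are collapsed, and several states are omitted). The `Machine` class and the transition table are illustrative assumptions, not any vendor's implementation.

```python
from enum import Enum


class State(Enum):
    STOPPED = "Stopped"
    IDLE = "Idle"
    EXECUTE = "Execute"
    HELD = "Held"
    ABORTED = "Aborted"


# Simplified transition table: (current state, command) -> next state.
# Transitional states such as Resetting or Starting are collapsed here.
TRANSITIONS = {
    (State.STOPPED, "Reset"): State.IDLE,
    (State.IDLE, "Start"): State.EXECUTE,
    (State.EXECUTE, "Hold"): State.HELD,
    (State.HELD, "Unhold"): State.EXECUTE,
    (State.ABORTED, "Clear"): State.STOPPED,
}


class Machine:
    """Hypothetical packaging machine exposing a uniform command interface."""

    def __init__(self, name):
        self.name = name
        self.state = State.STOPPED

    def command(self, cmd):
        # Abort is accepted from any state; Stop from any state except Aborted.
        if cmd == "Abort":
            self.state = State.ABORTED
        elif cmd == "Stop" and self.state is not State.ABORTED:
            self.state = State.STOPPED
        else:
            key = (self.state, cmd)
            if key not in TRANSITIONS:
                raise ValueError(f"{cmd} not allowed in state {self.state.value}")
            self.state = TRANSITIONS[key]
        return self.state


filler = Machine("filler")
for cmd in ("Reset", "Start", "Hold", "Unhold"):
    filler.command(cmd)
print(filler.state.value)  # Execute
```

An operator or line supervisor who learns these commands on one machine can, in principle, apply them to the next one, which is where the training benefit comes from.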

These experiences make me pay close attention to the ExxonMobil / Lockheed Martin quest for a “commodity” DCS system. Will this idea work this time? Will there be a standard specification for a commodity DCS?

Owner/Operator Driven Interoperability

I say all that to address the real problem: buy-in by owner/operators and end users. If they drive compliance to the standard, then change will happen.

Satish commented again yesterday, “I could see active participation of consultants and vendors than that of the real end users. Being a voluntary activity, my personal reading is standards are more influenced by organizations who expect to reap benefit out of it by investing on them. Adoption of standard could fine tune it better and match it to the real use but it takes much longer! The challenge is : How to ensure end user problems are in the top of the list for developing / updating a standard?”

He is exactly right. The OpenO&M and MIMOSA work that I have been referencing is built upon several ISO standards but, importantly, has been pushed by several large owner/operators who project immense savings (millions of dollars) by implementing the ecosystem. I have published an executive summary white paper of the project that you can download. A more detailed view is in process.

I like Jake Brodsky’s comment, “I often refer to IoT as ‘SCADA in drag.’ ” Check out his entire comment on yesterday’s post. I’d be most interested in following up on his comment about the mistakes SCADA people made and learned from, which the IoT people could learn from as well.

by Gary Mintchell | Mar 23, 2016 | Automation, Internet of Things, Interoperability, Operations Management

A couple of people commented on the protocol wars post I published yesterday. I’ll address a couple of comments and then push on toward some further enterprise-level conclusions.

Satish wrote: “Nice summary Gary! Standardization is a double edged sword for vendors and always there is a cautious approach. You know what happened with Real time Ethernet, Field bus & ISA 100 standards. It will take its time to mature when customers demand it and vendors are happy with the ROI. I could see how vendors make money in integration through OPC by supporting the standard but adding cost when it comes to usage of it (fine letter prints?). Probably when automation system becomes COTS system it will be a drastic change – XOM’s project with Lockheed Martin is aimed at it, am not sure!”

Satish pointed out a couple of sore spots regarding standards. Actually, standards sometimes get a bad rap. Sometimes they deserve it. I don’t care to get deeply into any of them, but, for example, the wireless sensor network process was one of the worst examples in the past 20 years. Eventually the industry standard WirelessHART dominated simply due to market forces. From a technology point of view, there just wasn’t that great of a difference. We can think of others. Sometimes it is simply obfuscation on the part of one vendor or another.

However, he is correct about the tension between standards and suppliers. Suppliers think that they can provide a better end-to-end solution by keeping everything within their sandpile. Sometimes they can. All of us who have been on the implementing end know that that is not a 100% solution. Sorry.

Cynics think that suppliers simply wish to lock in customers with an extraordinarily high switching cost. All suppliers wish to have a customer for life, but I don’t take that cynicism to the extreme. It implies much better product development and coordination than is often the case.

That said, for suppliers, working with standards will almost always be Plan B.

That brings us to the owner/operator or end-user community. They mostly like Plan B. For several reasons. First, this does keep the supplier on its toes knowing that competition is just around the corner. Good service, technology, and prices can keep the customer happy. Slip up, and, well….

Further, owner/operators know that they have many more needs than one supplier can fill. And they have a multitude of legacy systems that need to be integrated into the overall system-of-systems. This also requires Plan B—interoperable systems built upon standards.

This interoperable system-of-systems, however, does not preclude best-of-class technologies and solutions within the COTS product. It only requires exposing data through standards in a standard format. That would include the RDLs and Web Services Information Service Bus Model (ws-ISBM) on the OIIE diagram. The OIIE does not care about what is within the blocks of operations, maintenance, design, and other applications. Just that data can flow smoothly from system to system.
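One way to picture "data can flow smoothly from system to system" is a small publish/subscribe bus, where applications agree only on topics and a message format and know nothing about each other's internals. The Python sketch below is an in-memory illustration of that pattern, not the ws-ISBM API. The `MessageBus` class, the `asset.condition` topic, and the message fields are all assumptions made for the example.

```python
from collections import defaultdict


class MessageBus:
    """Toy in-memory bus: applications share only topics and a message format."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, message):
        # Deliver to every subscriber; the publisher knows none of them.
        handlers = self._subscribers[topic]
        for handler in handlers:
            handler(message)
        return len(handlers)


bus = MessageBus()
work_orders = []


# A hypothetical maintenance application reacts to asset condition events.
def maintenance_app(message):
    if message["condition"] == "degraded":
        work_orders.append({"asset": message["asset_id"], "action": "inspect"})


bus.subscribe("asset.condition", maintenance_app)

# A hypothetical operations application publishes in the agreed format.
bus.publish("asset.condition", {"asset_id": "P-101", "condition": "degraded"})
print(work_orders)
```

Either application could be swapped for another supplier's product without touching the other, as long as the replacement speaks the same message format.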

System-of-systems

Aaron Crews of Emerson wrote on LinkedIn a little about Emerson’s work in the area. “Ok now draw a vertical line through your bus and call that a corporate firewall and it gets even more complex. Not pushing the approach as the be-all, end-all, but what Emerson has been doing with the ARES asset management platform is an interesting take on the reliability side of this.”

Let’s take Aaron’s thoughts about what Emerson is doing and consider in the next post what happens if many companies are doing something similar in different applications, putting these all together and looking at things from an enterprise point of view.

by Gary Mintchell | Mar 9, 2016 | Automation, Internet of Things, Interoperability, Operations Management, Technology

I’ve been thinking deeply about the industrial internet and the greater industrial ecosystem. A friend passed along an article from ZDNet written by Simon Bisson, “There’s A Huge Void At the Heart of the Internet of Things.”

The deck of the article reads, “Closed systems do not an internet make. It’s time to change that before it’s too late.”

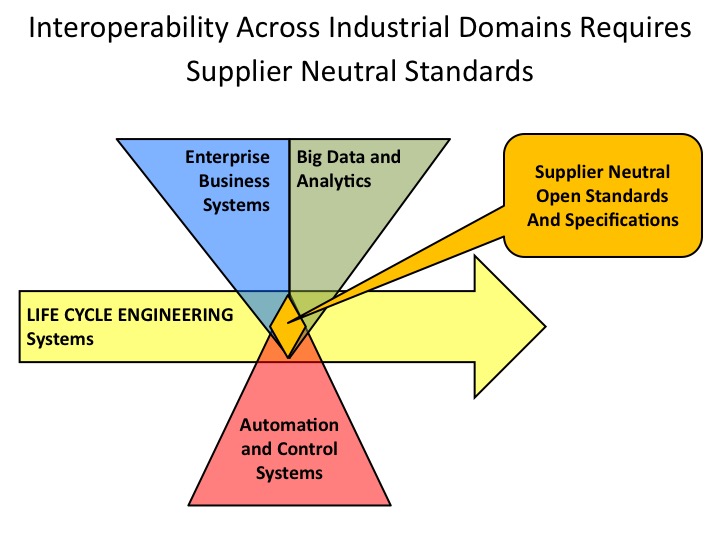

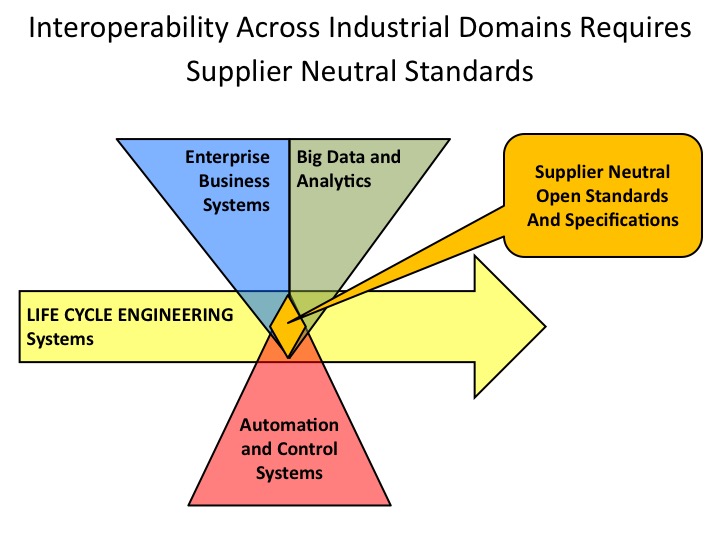

He knew I was working on some white papers for MIMOSA and the OpenO&M Initiative on interoperability of standards in the larger industrial ecosystem. In the case of what I’m writing, there exists a gap in areas covered by Enterprise Business Systems, Big Data and Analytics, Automation and Control Systems, and Life-Cycle Engineering Systems. The gap can be filled with Supplier Neutral Open Standards and Specifications.

Bisson writes, “Sensors are everywhere, but they’re solitary devices, unable to be part of a holistic web of devices that exposes the world around us, giving us the measurements we need to understand and control our environments.”

He notes a few feeble movements toward developing “communication and API standards for these devices, through standards like AllJoyn and Open Connectivity Foundation, the backbone is still missing: a service that will allow us to work with all the devices in our homes.”

Industrial Internet broadly speaking

Typical of a consumer-facing publication, he’s thinking home. I’m thinking industrial in a broad scope.

He continues, “Building that code isn’t hard, either. One of the more interesting IoT development platforms is Node-RED. It’s a semi-visual programming environment that allows you to drag and drop code modules, linking them to a range of inputs and outputs, combining multiple APIs into a node.js-based application that can run on a PC, or in the cloud, or even on devices like the Raspberry Pi.”

Bisson concludes, “The road to an interoperable Internet of Things isn’t hard to find. We just need to remember that our things are now software, and apply the lessons we’ve learnt about building secure and interoperable systems to those software things. And once we’ve got that secure, interoperable set of things, we can start to build the promised world of ubiquitous computing and ambient intelligence.”

I’d take his consumer ideas and apply them to industrial systems. The work I’m doing is to explain an industrial interoperable system-of-systems. I’m about ready to publish the first white paper, which is an executive summary. I’m about half done with the longer piece that dives into much greater detail. But he is right. Much has already been invented, developed, and implemented at a certain level. We need to fill the gap now.

by Gary Mintchell | Dec 2, 2015 | Automation, Interoperability, News, Process Control, Standards, Technology

Here is an industrial automation announcement from the recent SPS IPC Drives trade fair held annually in Nuremberg, Germany. This one discusses a new open integration, some say interoperability, program based upon open standards.

This blog has now completed eight years, through three names and domains: Gary Mintchell’s Radio Weblog, Gary Mintchell’s Feed Forward, and now The Manufacturing Connection. Through these eight years one consistent theme has been advocating for what I believe to be the user’s point of view: open integration.

Users have consistently (although unfortunately not always vocally) expressed the view that, while they love developing a strong partnership with preferred suppliers, they also want to be able to connect products from other suppliers as well as protect themselves by leaving an “out” in case of a problem with the current supplier.

The other position contains two points of view. Suppliers say that if they can control all the integration of parts, then they can provide a stronger and more consistent experience. Customers worry that locking themselves into one supplier will enable it to raise prices and that it will also leave them vulnerable to changes in the supplier’s business.

With that as an introduction, this announcement came my way via Endress+Hauser. That company is a strong measurement and instrumentation player as well as a valued partner of Rockwell Automation’s process business. The announcement concerns the “Open Integration Partner Program.”

I’m a little at a loss to describe exactly what this is, other than a “program.” It’s not an organization. Rather, it appears to be a memorandum of cooperation.

The program promotes the cooperation between providers of industrial automation systems and fieldbus communication. To date, eight companies have joined the program:

AUMA Riester, HIMA Paul Hildebrandt, Honeywell Process Solutions, Mitsubishi Electric, Pepperl+Fuchs, Rockwell Automation, R. STAHL and Schneider Electric.

“By working closely with our partners, we want to make sure that a relevant selection of products can be easily combined and integrated for common target markets,” outlines Michael Ziesemer, Chief Operating Officer of Endress+Hauser. This is done by using open communication standards such as HART, PROFIBUS, FOUNDATION Fieldbus, EtherNet/IP or PROFINET and open integration standards such as FDT, EDD or FDI. Ziesemer continues: “We are open for more cooperation partners. Every market stakeholder who, like us, consistently relies on open standards is invited to join the Open Integration program.”

Reference topologies are the key

Cooperation starts with what are known as reference topologies, which are worked out jointly by the Open Integration partners. Each reference topology is tailored to the customers’ applications and the field communication technologies used in these applications. “To fill the program with life in terms of content, we are going to target specific customers who might be interested in joining us,” added Ziesemer.

Depending on industrial segment and market, the focus will be on typical requirements such as availability, redundancy or explosion protection, followed by the selection of system components and field instruments of practical relevance. This exact combination will then be tested and documented before it is published as a joint recommendation, giving customers concrete and successfully validated suggestions for automating their plant.

Ziesemer adds: “With this joint validation as part of the Open Integration, we go well beyond the established conformity and interoperability tests that we have carried out for many years with all relevant process control systems.”

by Gary Mintchell | Aug 28, 2015 | Commentary, Data Management, Interoperability, News, Operations Management

This is a story about data interoperability and integration. This is a much-needed step in the industry. I just wish that it were more standards-driven and therefore more widespread.

But we’ll take every step forward we can get.

Arena Solutions, developer of cloud-based product lifecycle management (PLM) applications, announced that its flagship product, Arena PLM, now offers real-time synchronization with Kenandy Cloud ERP, an enterprise resource planning system for midmarket and large global enterprises built on the Salesforce Platform.

With this integration, the product record can be automatically passed from Arena PLM to Kenandy at the point of change approval. This eliminates errors and accelerates access of product information in Kenandy to create a more cohesive and efficient manufacturing process.

Arena PLM and Kenandy Cloud ERP can now communicate directly with each other, enabling customers to share up-to-date product data with finance, sales and manufacturing departments to ensure accurate financial planning and support operations.
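The handoff described here, a product record pushed to the ERP at the point of change approval, can be sketched in a few lines of Python. This is only an illustration of the pattern. It does not use or represent the Arena or Kenandy APIs, and the `Item`, `ErpStub`, and `approve_change` names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    part_number: str
    revision: str
    bom: list = field(default_factory=list)  # child part numbers


class ErpStub:
    """Stand-in for an ERP item master, keyed by part number."""

    def __init__(self):
        self.items = {}

    def upsert(self, item):
        # Insert or overwrite so a repeated sync leaves one current record.
        self.items[item.part_number] = item


def approve_change(item, new_revision, erp):
    """Approve a change on the PLM side, then push the released record."""
    released = Item(item.part_number, new_revision, list(item.bom))
    erp.upsert(released)
    return released


erp = ErpStub()
bracket = Item("BRK-100", "A", bom=["SCR-8", "PLT-2"])
approve_change(bracket, "B", erp)
print(erp.items["BRK-100"].revision)  # B
```

Tying the push to the approval step is what removes the manual re-entry where errors usually creep in.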

“We are excited to be partnering with Kenandy to deliver a fully cloud-based integrated PLM and ERP solution,” said Steve Chalgren, EVP of product management and chief strategy officer at Arena Solutions. “The integration between our products is simple, clean, and can be implemented quickly. Isn’t that refreshing?”

Using the integration between Arena PLM and Kenandy Cloud ERP, customers can:

- Manage the product development process of product data (items, bill of materials, manufacturer and supplier data) in a centralized Arena PLM system through the entire product lifecycle; and

- Use Kenandy to quickly plan, procure and manufacture products upon handoff of the latest product release from Arena.

Primus Power Benefits from Seamless Integration

Delivering clean-tech energy storage solutions based on advanced battery technology, Hayward, California-based Primus Power was already successfully using Arena PLM for their design and engineering activities. It was essential that their new ERP and existing PLM system integrate seamlessly.

In Primus’ fast moving, design-focused environment, an engineer can now implement a product idea or improvement in the PLM system and within minutes the new part number is generated in Kenandy automatically. Instantly, people throughout the company can find that part; there’s a pricing history for it, a supply history. “People no longer say, ‘Did we order that bracket?’ They can now actually see that it’s on order. They can find the purchase order and the promised delivery date,” said Mark Collins, senior director of operations at Primus. “So much information is now available at people’s fingertips simply because we created a part number that’s now searchable in the system.”

“Cloud solutions deliver business agility in ways that on-premise solutions just cannot,” said Rod Butters, president and chief operating officer at Kenandy. “Together Arena and Kenandy are delivering a solution that can be deployed fast and, more importantly, helps the business run fast. Even though our customers are working with our two products, their entire team sees a single, complete, real-time source of truth from product design to product delivered to bottom-line results.”

I have written about Kenandy a couple of times this year.

by Gary Mintchell | Aug 10, 2015 | Automation, Internet of Things, Interoperability, News, Technology

During NI Week last week in Austin, Texas, IBM representatives discussed some news with me about a new engineering software tool the company has released, called Product Line Engineering (PLE), designed to help manufacturers deal with the complexity of building smart, connected devices. Users of Internet of Things (IoT) products worldwide have geographic-specific needs, leading to slight variations in design across different markets. The IBM software is designed to help engineers manage the cost and effort of customizing product designs.

You may think, as I did, about IBM as an enterprise software company specializing in large, complex databases along with the Watson analytic engine. IBM is also home to Rational Software—an engineering tool used by many developers in the embedded software space. I had forgotten about the many engineering tools existing under the IBM umbrella.

They described the reason for the new release. Manufacturers traditionally manage customization needs by grouping similar designs into product lines. Products within a specific line may have up to 85% of their design in common, with the rest being variable, depending on market requirements and consumer demand and expectation. For example, a car might have a common body and suspension system, while consumers have the option of choosing interior, engine and transmission.
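The car example maps naturally onto a small data structure: one shared definition of the common design, plus a list of variation points with the options allowed for each. The Python sketch below illustrates that idea only. It says nothing about how the IBM tool works internally, and the feature names and option values are made up for the example.

```python
# Features shared by every variant in the (hypothetical) product line.
COMMON = {"body": "sedan-body", "suspension": "standard"}

# Variation points and the options allowed for each.
VARIATION_POINTS = {
    "interior": {"cloth", "leather"},
    "engine": {"1.6L", "2.0L"},
    "transmission": {"manual", "automatic"},
}


def build_variant(choices):
    """Combine the common design with validated choices for each variation point."""
    missing = VARIATION_POINTS.keys() - choices.keys()
    if missing:
        raise ValueError(f"missing choices: {sorted(missing)}")
    for point, option in choices.items():
        if option not in VARIATION_POINTS.get(point, set()):
            raise ValueError(f"{option!r} is not a valid {point}")
    # The common part is defined once and reused, never copied by hand.
    return {**COMMON, **choices}


eu_model = build_variant(
    {"interior": "cloth", "engine": "1.6L", "transmission": "manual"}
)
print(eu_model["body"], eu_model["engine"])  # sedan-body 1.6L
```

Because the common features live in one place, a change to the body or suspension reaches every market variant without duplicated data.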

Product Line Engineering from IBM helps engineers specify what’s common and what’s variable within a product line, reducing data duplication and the potential for design errors. The technology supports critical engineering tasks including software development, model-based design, systems engineering, and test and quality management, helping engineers design complex IoT products faster and with fewer defects. Additional highlights include:

- Helps manufacturers manage market-specific requirements: Delivered as a web-based product or managed service, the IBM software can help manufacturers become more competitive across worldwide markets by helping them manage versions of requirements across multiple domains including mechanical, electronics and software;

- Leverages the Open Services for Lifecycle Collaboration (OSLC) specification: this helps define configuration management capabilities that span tools and disciplines, including requirements management, systems engineering, modeling, and test and quality.

Organizations including Bosch, Datamato Technologies and Project CRYSTAL are leveraging new IBM PLE capabilities to transform business processes. Project CRYSTAL aims to specify product configurations that include data from multiple engineering disciplines, eliminating the need to search multiple places for the right data, and reducing the risk associated with developing complex products.

Dr. Christian El Salloum, AVL List GmbH Graz Austria, the global project coordinator for the ARTEMIS CRYSTAL project, said, “Project CRYSTAL aims to drive tool interoperability widely across four industry segments for advanced systems engineering. Version handling, configuration management and product line engineering are all extremely important capabilities for development of smart, connected products. Working with IBM and others, we are investigating the OSLC Configuration Management draft specification for addressing interoperability needs associated with mission-critical design across multi-disciplinary teams and partners.”

Rob Ekkel, manager at Philips Healthcare R&D and project leader in the EU Crystal project, noted, “Together with IBM and other partners, we are looking in the Crystal project for innovation of our high tech, safety critical medical systems. Given the pace of the market and the technology, we have to manage multiple concurrent versions and configurations of our engineering work products, not just software, but also specifications, and e.g. simulation, test and field data. Interoperability of software and systems engineering tools is essential for us, and we consider IBM as a valuable partner when it comes to OSLC based integrations of engineering tools. We are interested to explore in Crystal the Product Engineering capabilities that IBM is working on, and to extend our current Crystal experiments with e.g. Safety Risk Management with OSLC based product engineering.”

Nico Maldener, Senior Project Manager, Bosch, added, “Tool-based product line engineering helps Bosch to faster tailor its products to meet the needs of world-wide markets.”

Sachin Londhe, Managing Director, Datamato Technologies, said, “To meet its objective of delivering high-quality products and services, Datamato depends on leading tools. We expect that product line engineering and software development capabilities from IBM will help us provide our clients with a competitive advantage.”