by Gary Mintchell | Mar 24, 2016 | Automation, Internet of Things, Interoperability, Operations Management

Owner/Operators do see great benefits to standards for system-of-systems interoperability. Getting there is the problem.

Aaron Crews talked about what Emerson is doing along the lines I’ve been discussing. Tim Sowell of Schneider Electric Software hasn’t commented (that’s OK), but I’ve been looking at his blog posts and commenting for a while. He has been addressing some of these issues from another point of view. I’m interested in what the rest of the technology development community is working on.

Let’s take the idea of what various suppliers are working on and raise our point of view to that of an enterprise solutions architect. These professionals are concerned about what individual suppliers are doing, of course, but they also must make all of this work together for the common good.

Up until now, they have had to depend upon expensive integrators to piece all the parts together. So maybe they find custom ways to tie together Intergraph, SAP, Maximo, and Emerson (just to pull a few suppliers out of the air) so that appropriate solutions can be devised for specific problems. And maybe they are still paying high rates for the integration while the integrator hires low-cost engineering talent from India or other countries.

When the owner/operators see their benefits and decide to act, then useful interoperability standards can be written, approved, and implemented in such a way as to benefit all parties.

Standards Driving Products

I think back to the early OMAC work of the late 1990s. A few large end users wanted to drive down the cost of machine controllers, which they felt was higher than the value they were getting. They wanted to develop a specification for a generic, commodity PLC. No supplier was interested. (Are we surprised?) In the end, the customers didn’t build a strong enough value proposition to drive a new controller. (I was told behind the scenes that they did succeed in getting the major supplier to drop prices, and everything else was forgotten.)

Another OMAC drive for an industry standard was PackML (Packaging Machine Language), a standard way of defining the states and interface of packaging machines. This one came closer to working. It did not try to dictate the inside of the control; it merely provided an industry standard way of interfacing with the machine. That part was successful. However, two problems ensued. A major consumer products company put it in the spec, but that did not guarantee that purchasing would open up bidding to other suppliers. Smaller control companies hoped that following the spec would level the playing field and allow them to compete against the majors. In the end, nothing much changed, except that machine interfaces did become more standardized from machine to machine, greatly easing training and workforce deployment for CPG manufacturers.

These experiences make me pay close attention to the ExxonMobil / Lockheed Martin quest for a “commodity” DCS system. Will this idea work this time? Will there be a standard specification for a commodity DCS?

Owner/Operator Driven Interoperability

I say all that to address the real problem: buy-in by owner/operators and end users. If they drive compliance to the standard, then change will happen.

Satish commented again yesterday, “I could see active participation of consultants and vendors than that of the real end users. Being a voluntary activity, my personal reading is standards are more influenced by organizations who expect to reap benefit out of it by investing on them. Adoption of standard could fine tune it better and match it to the real use but it takes much longer! The challenge is : How to ensure end user problems are in the top of the list for developing / updating a standard?”

He is exactly right. The OpenO&M and MIMOSA work that I have been referencing is built upon several ISO standards but, importantly, has been pushed by several large owner/operators who project immense savings (millions of dollars) from implementing the ecosystem. I have published an executive summary white paper of the project that you can download. A more detailed view is in process.

I like Jake Brodsky’s comment, “I often refer to IoT as ‘SCADA in drag.’ ” Check out his entire comment on yesterday’s post. I’d be most interested in following up on his point about the mistakes SCADA people made, and learned from, that IoT people could learn from too.

by Gary Mintchell | Mar 23, 2016 | Automation, Internet of Things, Interoperability, Operations Management

A couple of people commented on my protocol wars post I published yesterday. I’ll address a couple of comments and then push on toward some further enterprise level conclusions.

Satish wrote: “Nice summary Gary! Standardization is a double edged sword for vendors and always there is a cautious approach. You know what happened with Real time Ethernet, Field bus & ISA 100 standards. It will take its time to mature when customers demand it and vendors are happy with the ROI. I could see how vendors make money in integration through OPC by supporting the standard but adding cost when it comes to usage of it (fine letter prints?). Probably when automation system becomes COTS system it will be a drastic change – XOM’s project with Lockheed Martin is aimed at it, am not sure!”

Satish pointed out a couple of sore spots regarding standards. Actually, standards sometimes get a bad rap. Sometimes they deserve it. I don’t care to get deeply into any of them, but, for example, the wireless sensor network standards process was one of the worst examples in the past 20 years. Eventually the industry standard WirelessHART dominated simply due to market forces. From a technology point of view, there just wasn’t that great a difference. We can think of others. Sometimes it is simply obfuscation on the part of one vendor or another.

However, he is correct about the tension between standards and suppliers. Suppliers think that they can provide a better end-to-end solution by keeping everything within their sandpile. Sometimes they can. All of us who have been on the implementing end know that that is not a 100% solution. Sorry.

Cynics think that suppliers simply wish to lock in customers with an extraordinarily high switching cost. All suppliers wish to have a customer for life, but I don’t take that cynicism to the extreme. It implies much better product development and coordination than is often the case.

That said, for suppliers, working with standards will almost always be Plan B.

That brings us to the owner/operator or end-user community. They mostly like Plan B, for several reasons. First, it keeps the supplier on its toes, knowing that competition is just around the corner. Good service, technology, and prices can keep the customer happy. Slip up, and, well….

Further, owner/operators know that they have many more needs than one supplier can fill. And they have a multitude of legacy systems that need to be integrated into the overall system-of-systems. This also requires Plan B—interoperable systems built upon standards.

This interoperable system-of-systems, however, does not preclude best-in-class technologies and solutions within the COTS product. It only requires exposing data through standards, in a standard format. That would include the reference data libraries (RDLs) and the Web Services Information Service Bus Model (ws-ISBM) on the OIIE diagram. The OIIE does not care what is within the blocks of operations, maintenance, design, and other applications, only that data can flow smoothly from system to system.
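To make the "only the exposed format matters" point concrete, here is a minimal sketch, assuming a hypothetical vendor record and a hypothetical shared schema. None of the field names come from an OIIE, MIMOSA, or ISBM schema; they only illustrate that the supplier's internal shape stays private while the outward-facing mapping is what has to be agreed on.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


# Hypothetical vendor-internal record: its shape is the supplier's own business.
@dataclass
class VendorPumpStatus:
    pump_tag: str
    vib_mm_s: float   # vibration velocity in mm/s
    ts_epoch: float   # seconds since the Unix epoch


def to_common_format(rec: VendorPumpStatus) -> dict:
    """Map the internal record onto a hypothetical shared schema.

    Only this outward-facing shape needs agreement between systems; the
    field names here are illustrative, not taken from any real standard.
    """
    return {
        "asset_id": rec.pump_tag,
        "measurement": "vibration_velocity",
        "value": rec.vib_mm_s,
        "unit": "mm/s",
        "timestamp": datetime.fromtimestamp(rec.ts_epoch, tz=timezone.utc).isoformat(),
    }
```

The vendor can rename or restructure `VendorPumpStatus` freely; as long as `to_common_format` keeps producing the agreed fields, downstream systems are unaffected.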

System-of-systems

Aaron Crews of Emerson wrote on LinkedIn a little about Emerson’s work in the area. “Ok now draw a vertical line through your bus and call that a corporate firewall and it gets even more complex. Not pushing the approach as the be-all, end-all, but what Emerson has been doing with the ARES asset management platform is an interesting take on the reliability side of this.”

Let’s take Aaron’s thoughts about what Emerson is doing and consider in the next post what happens if many companies are doing something similar in different applications, putting these all together and looking at things from an enterprise point of view.

by Gary Mintchell | Mar 22, 2016 | Automation, Internet of Things, Operations Management

I’ve spent way too much time on the phone and on GoToMeeting over the past several days. So I let the last post on the hierarchy of the Purdue Model sit and ferment. Thanks for the comments.

Well, I made it sound so simple, didn’t I? I mean, just run a wire around the control system and move data in a non-hierarchical manner to The Cloud. Voila. The Industrial Internet of Things. Devices serving data on the Internet.

Turns out it’s not that simple, is it?

First off, “The Cloud” is actually a data repository (or lots of them) located on a server somewhere and probably within an application of some sort. These applications can be siloed like they mostly are now. Or maybe they share data in a federated manner—the trend of the future.

To accomplish that federation will require standardized ways of describing devices, data, and the metadata. I’ll have more to say about that later relative to some white papers I’m writing for MIMOSA and The OpenO&M Initiative.

Typically data is carried by protocols. OPC (and its latest iteration, OPC UA) has been popular in control-to-HMI applications, and more. Other Internet of Things protocols include XMPP, MQTT, and AMQP. Many payloads are encoded as JSON, and you may have heard of SOAP and RESTful web services.

Will we live with a multiplicity of protocols? Can we? Will some dominant supplier force a standard?

Check out these recent blogs and articles:

GE Blog – Industrial Internet Protocol Wars

FastCompany, Why the Internet of Things Might Never Speak A Common Language

Inductive Automation Webinar — MQTT the only control protocol you need

OPC – Reshape the Automation Pyramid (is OPC UA all you need?)

Interoperability Among Protocols

What we need is something in the middle that wraps each of the messages in a standard way and delivers it to the application or Enterprise Service Bus. Such a technology is described by the OpenO&M Information Service Bus Model (ISBM), the core component of the Open Industrial Interoperability Ecosystem (OIIE) that I introduced in the last post. The ISBM is actually not a bus, per se, but a set of APIs based on Web Services. It is also described in ISA-95 Part 6 as the Messaging Service Model (MSM).
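The "wrap each message in a standard way" idea can be sketched in a few lines. This is an illustration under stated assumptions, not the ISBM itself: the `Envelope` fields and the `wrap_mqtt`/`wrap_opcua` helpers are hypothetical, the MQTT payload is assumed to be UTF-8 JSON, and the OPC UA side takes a value that has already been read, so no protocol library is involved.

```python
import json
import uuid
from dataclasses import asdict, dataclass


@dataclass
class Envelope:
    message_id: str
    source_protocol: str  # e.g. "mqtt" or "opcua"
    topic: str            # original address: MQTT topic or OPC UA node id
    body: dict            # decoded payload


def wrap_mqtt(topic: str, payload: bytes) -> Envelope:
    # Assumes the MQTT payload is UTF-8 encoded JSON; real deployments vary.
    return Envelope(str(uuid.uuid4()), "mqtt", topic, json.loads(payload.decode("utf-8")))


def wrap_opcua(node_id: str, value, source_timestamp: str) -> Envelope:
    # Takes a value already read from an OPC UA server; no client library is used here.
    return Envelope(str(uuid.uuid4()), "opcua", node_id,
                    {"value": value, "timestamp": source_timestamp})


def deliver(envelope: Envelope) -> str:
    # Serialize every message to one uniform shape before handing it on.
    return json.dumps(asdict(envelope))
```

Whatever protocol a reading arrives on, the receiving application sees the same four top-level keys, which is the point of putting something in the middle.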

The MSM is described in a few points by Dennis Brandl:

- Defines a standard method for interfacing with different Enterprise Service Buses

- Enables sending and receiving messages between applications using a common interface

- Reduces the number of interfaces that must be supported in an integration project
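The third point is simple arithmetic. Assuming the worst case, where every application must exchange data with every other one, direct point-to-point integration of n applications needs n(n-1)/2 interfaces, while a common messaging interface needs only one per application. This sketch ignores the separate effort of agreeing on data meaning.

```python
def point_to_point_interfaces(n: int) -> int:
    """Interfaces needed if every pair of n applications is integrated directly."""
    return n * (n - 1) // 2


def common_interface_count(n: int) -> int:
    """Interfaces needed if each application implements one common messaging interface."""
    return n


for n in (3, 5, 10, 20):
    print(n, point_to_point_interfaces(n), common_interface_count(n))
```

For 10 applications that is 45 point-to-point interfaces versus 10; the gap widens quickly as more systems join. For very small numbers of systems (two or three) there is no saving, which is why the benefit shows up mainly at enterprise scale.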

Here is a graphic representation Brandl has developed:

These are simple Web Services designed to remove complexity from the transaction at this stage of communication.
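A minimal in-memory sketch of what "a common interface in front of different buses" means for an application developer. The class and method names are hypothetical; they loosely echo publish/subscribe vocabulary but are not the ISBM or ISA-95 Part 6 operation names or signatures, and a real implementation would sit on Web Services in front of an actual ESB.

```python
from collections import defaultdict, deque


class MessageService:
    """In-memory stand-in for an ESB behind one common interface (hypothetical names)."""

    def __init__(self):
        # channel name -> list of subscriber queues
        self._subscribers = defaultdict(list)

    def open_subscription(self, channel: str) -> deque:
        """Register a new subscriber on a channel and return its private queue."""
        queue = deque()
        self._subscribers[channel].append(queue)
        return queue

    def post_publication(self, channel: str, message: dict) -> int:
        """Copy the message to every subscriber queue on the channel; return how many received it."""
        queues = self._subscribers.get(channel, [])
        for q in queues:
            q.append(message)
        return len(queues)

    @staticmethod
    def read_publication(queue: deque):
        """Return the oldest unread message, or None if the queue is empty."""
        return queue.popleft() if queue else None
```

A maintenance application and an operations application can both subscribe to the same hypothetical `"asset/condition"` channel; the publisher neither knows nor cares which bus product, or how many consumers, sit behind the interface.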

by Gary Mintchell | Mar 16, 2016 | Automation, Operations Management

Mike Boudreaux, director of performance and reliability monitoring for Emerson Process Management, has published an important article in Plant Services magazine discussing some limitations of the Purdue Model in incorporating the Industrial Internet of Things. Many applications (safety, environmental, energy, reliability) can be addressed outside the control system; they just are not described within the current model.

Interestingly, about the same time I saw a blog post at Emerson Process Experts quoting Emerson Process Chief Strategic Officer Peter Zornio discussing the same topic.

I’ve been thinking about this for years. Mike’s article (which I recommend you read–now) brought the thoughts into focus.

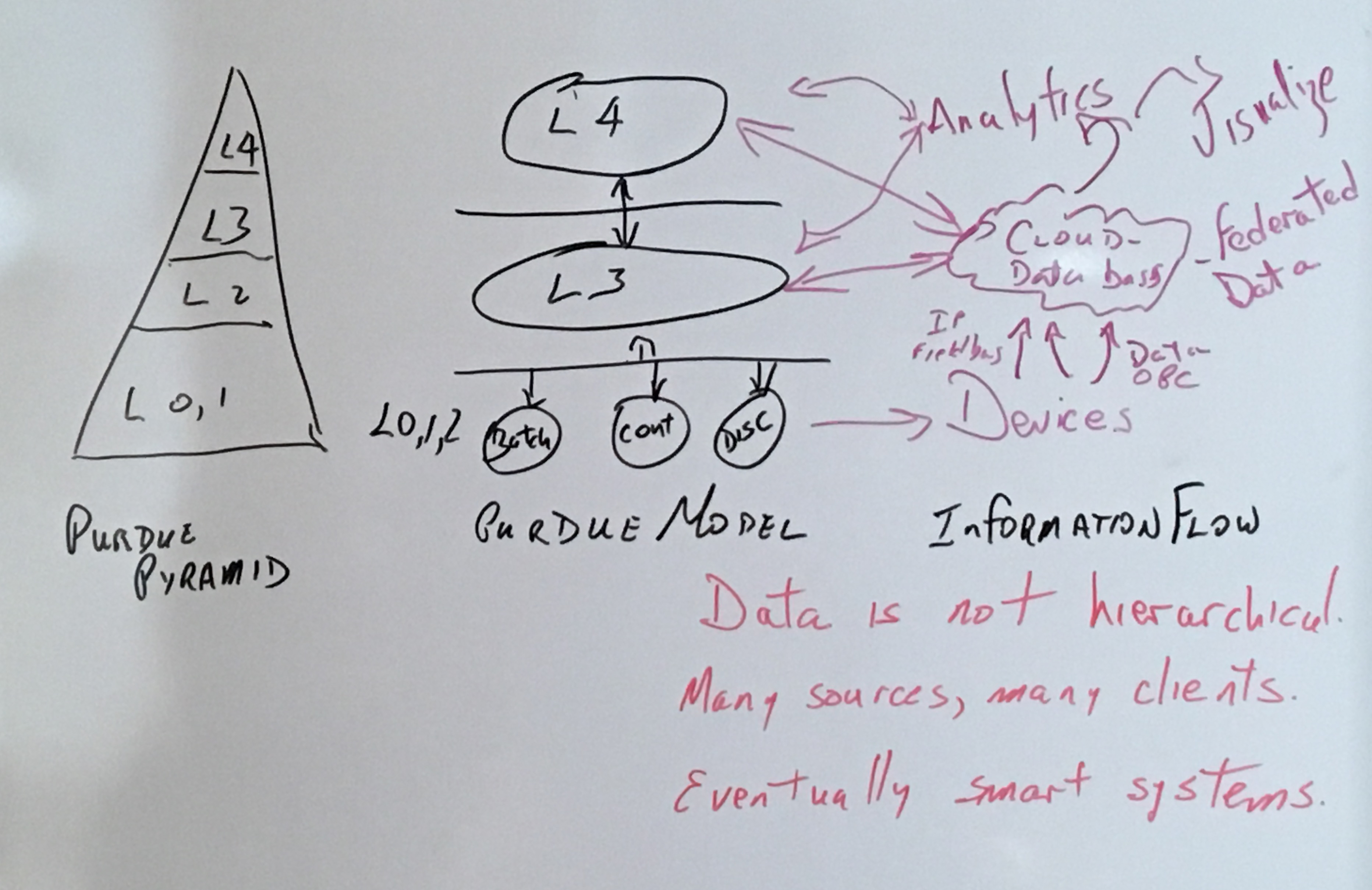

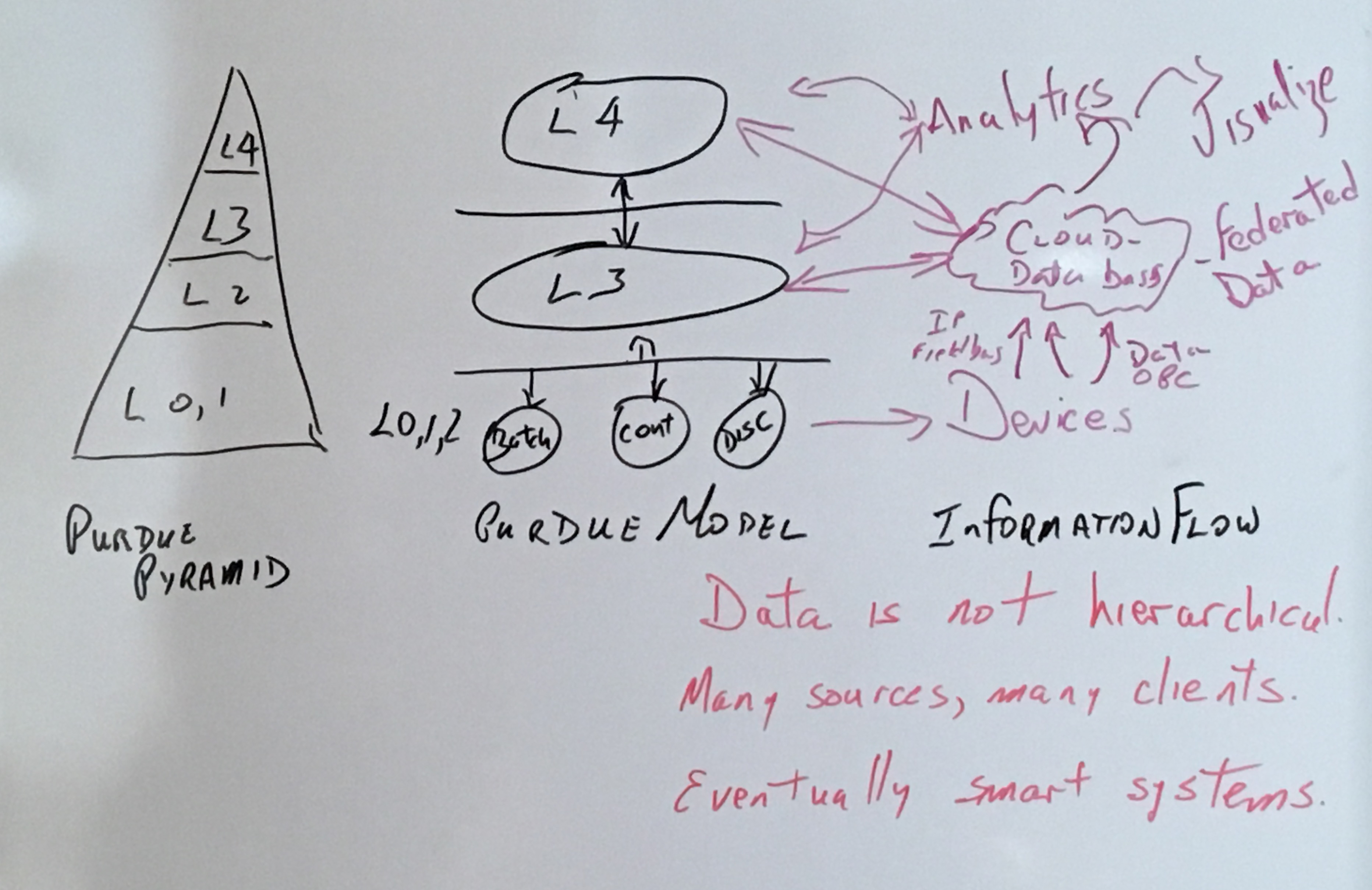

Purdue Enterprise Reference Architecture Model

The Purdue Enterprise Reference Architecture Model has guided manufacturing enterprises and their suppliers for 25 years. The model is usually represented by a pyramid shape. I’ve used a diagram from Wikipedia that just uses circles and arrows.

This model describes various “levels” of applications and controls in a manufacturing enterprise, from the physical level of the plant (Level 0) through control equipment and strategies (Level 2).

Level 3 describes the manufacturing control level. These are applications that “control” operations. This level once was labelled Manufacturing Execution Systems (MES). The trade association for this level–MESA International–now labels this “Manufacturing Enterprise Solutions” to maintain the MES part but describe an increased role for applications at this level. The ISA-95 standard for enterprise-control system integration labels this level Manufacturing Operations Management. It is quite common now to hear the phrase Operations Management referring to the various applications that inhabit this level. This is also the domain of Manufacturing IT professionals.

Level 4 is the domain of Enterprise Business Planning, or Enterprise Resource Planning (ERP) systems. It’s the domain of corporate IT.

Hierarchical Data Flow

The Purdue Model also describes a data flow model. That may or may not have been the intent, but it does. The model assumes that sensors and other data-serving field devices are connected to the control system. The control system serves the dual purpose of controlling processes or machines and serving massaged data to the operations management level of applications. In turn, Level 3 applications feed information to the enterprise business system level.

Alternative Data Flow

What Mike is describing, and I’ve tried sketching at various times, is a parallel diagram that shows data flow outside the control system. He rightly observes that the Industrial Internet of Things greatly expands the Purdue Model.

So I went to the whiteboard. Here’s a sketch of some things I’ve been thinking about. What do you think? Steal it if you want, or incorporate it into your own ideas. I’m not an analyst who gets six-figure contracts to think up this stuff. If you want to hire me to help you expand your business around these ideas, well, that would be good.

I have some basic assumptions at this time:

- Data is not hierarchical

- Data has many sources and many clients

- Eventually we can expect smart systems automatically moving data and initiating applications

Perhaps 25 years ago we could consider a hierarchical data structure. Today we have moved to a federated data structure. There are data repositories all over the enterprise. We just need a standardized method of publish/subscribe so that the app that needs data can find it–and trust it.
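Finding data in a federated structure, and trusting it, can be sketched as a small registry. Everything here is hypothetical: the repository names, the `data_item` naming scheme, and the single `verified` flag, which stands in for whatever provenance or quality checks an enterprise would actually require.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSource:
    repository: str  # where the data lives (hypothetical names in the example)
    data_item: str   # what it offers, e.g. an asset measurement
    verified: bool   # stand-in for "can this source be trusted?"


class Registry:
    """Repositories advertise what they hold; applications ask where to find it."""

    def __init__(self):
        self._sources = []

    def advertise(self, source: DataSource) -> None:
        self._sources.append(source)

    def find(self, data_item: str, require_verified: bool = True) -> list:
        """Return repositories offering data_item, skipping unverified ones unless asked."""
        return [s.repository for s in self._sources
                if s.data_item == data_item and (s.verified or not require_verified)]
```

For example, if a site historian and an unverified test sandbox both advertise `"P-101/vibration"`, `find("P-101/vibration")` returns only the historian, while `find("P-101/vibration", require_verified=False)` returns both. No level of the hierarchy has to broker the request.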

Now some have written that this technology means the end of Level 3. Of course it doesn’t. Enterprises still need all that work done. What it does mean is the end of data silos behind unbreachable walls. It also means that there are many opportunities for new apps and connections. Once we blow away the static nature of the model, the way to innovation is cleared.

OpenO&M Model

Perhaps the future will look more like a model that I’m describing in a series of white papers. Growing out of the OpenO&M Initiative, the Open Industrial Interoperability Ecosystem model looks interesting. I’ve just about finished an executive summary white paper that I’ll link to my Webpage. The longer white paper is in process. More on that later. And look for an article in Uptime magazine.

by Gary Mintchell | Mar 15, 2016 | Automation, Internet of Things, Podcast

Manufacturing strategies: my 146th podcast (yes, I don’t get around to them regularly). I look at the discussions around the Industrial Internet of Things and Industry 4.0, likening them to strategies.
