by Gary Mintchell | Mar 24, 2016 | Automation, Internet of Things, Interoperability, Operations Management

Owner/Operators do see great benefits to standards for system-of-systems interoperability. Getting there is the problem.

Aaron Crews talked about what Emerson is doing along the lines I’ve been discussing. Tim Sowell of Schneider Electric Software hasn’t commented (that’s OK), but I’ve been looking at his blog posts and commenting for a while. He has been addressing some of these issues from another point of view. I’m interested in what the rest of the technology development community is working on.

Let’s take the idea of what various suppliers are working on and raise our point of view to that of an enterprise solutions architect. These professionals are concerned about what individual suppliers are doing, of course, but they also must make all of this work together for the common good.

Until now, they have had to depend on expensive integrators to piece all the parts together. So maybe they find custom ways to tie together Intergraph, SAP, Maximo, and Emerson (just to pull a few suppliers out of the air) so that appropriate solutions can be devised for specific problems. And maybe they are still paying high rates for the integration while the integrator hires low-cost engineering from India or other countries.

When the owner/operators see their benefits and decide to act, then useful interoperability standards can be written, approved, and implemented in such a way to benefit all parties.

Standards Driving Products

I think back to the early OMAC work of the late ’90s. A few large end users wanted to drive down the cost of machine controllers, which they felt was higher than the value they were getting. They wanted to develop a specification for a generic, commodity PLC. No supplier was interested. (Are we surprised?) In the end, the customers could not build a strong enough value proposition to bring a new controller to market. (I was told behind the scenes that they did succeed in getting the major supplier to drop prices and everything else was forgotten.)

Another OMAC drive for an industry standard was PackML, the Packaging Machine Language. This one came closer to working. It did not try to dictate the inside of the control; it merely provided an industry-standard way of interfacing with the machine. That part was successful. However, two problems ensued. A major consumer products company put it in the spec, but that did not guarantee that purchasing would open up bidding to other suppliers. Smaller control companies hoped that following the spec would level the playing field and allow them to compete against the majors. In the end, nothing much changed, except that the machine interface did become more standardized from machine to machine, greatly easing training and workforce deployment problems for CPG manufacturers.

These experiences make me pay close attention to the ExxonMobil / Lockheed Martin quest for a “commodity” DCS system. Will this idea work this time? Will there be a standard specification for a commodity DCS?

Owner/Operator Driven Interoperability

I say all that to address the real problem: buy-in by owner/operators and end users. If they drive compliance with the standard, then change will happen.

Satish commented again yesterday, “I could see active participation of consultants and vendors than that of the real end users. Being a voluntary activity, my personal reading is standards are more influenced by organizations who expect to reap benefit out of it by investing on them. Adoption of standard could fine tune it better and match it to the real use but it takes much longer! The challenge is : How to ensure end user problems are in the top of the list for developing / updating a standard?”

He is exactly right. The OpenO&M and MIMOSA work that I have been referencing is built upon several ISO standards but, importantly, has been pushed by several large owner/operators who project immense savings (millions of dollars) from implementing the ecosystem. I have published an executive summary white paper of the project that you can download. A more detailed view is in process.

I like Jake Brodsky’s comment, “I often refer to IoT as ‘SCADA in drag.’ ” Check out his entire comment on yesterday’s post. I’d be most interested in following up on his comment about mistakes SCADA people made and learned from that the IoT people could learn. That would be interesting.

by Gary Mintchell | Mar 22, 2016 | Automation, Internet of Things, Operations Management

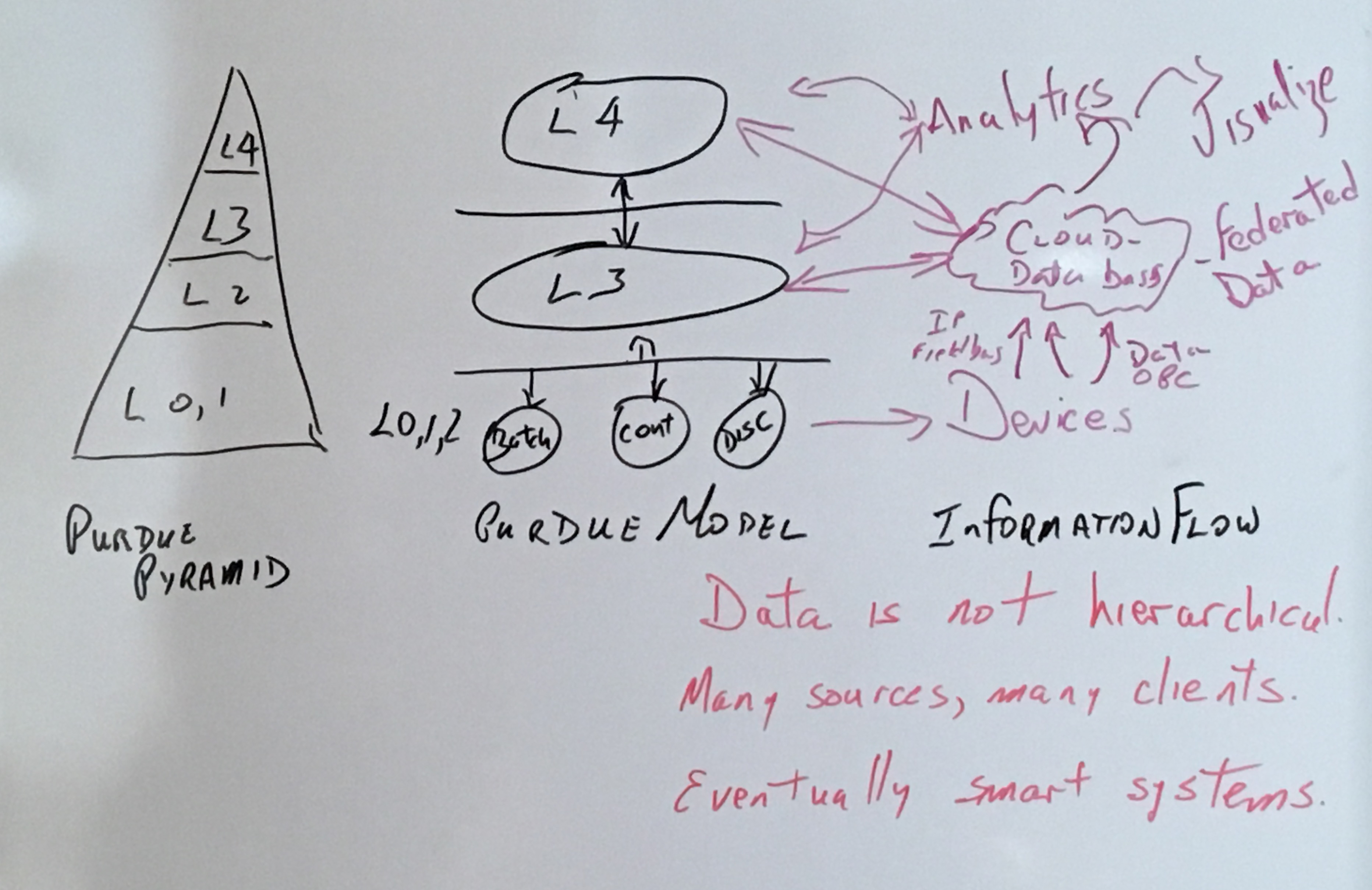

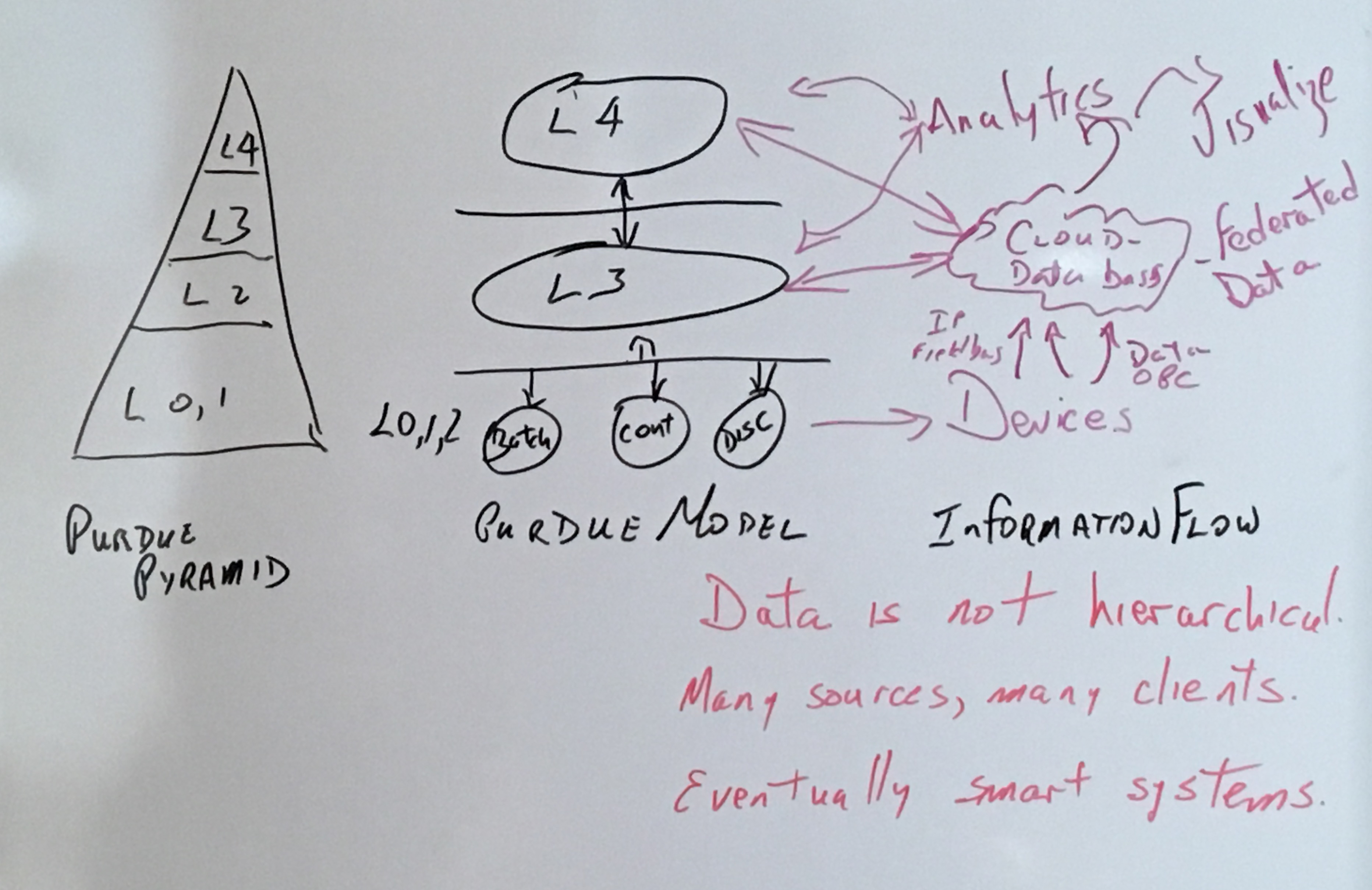

I’ve spent way too much time on the phone and on GoToMeeting over the past several days. So I let the last post on the hierarchy of the Purdue Model sit and ferment. Thanks for the comments.

Well, I made it sound so simple, didn’t I? I mean, just run a wire around the control system and move data in a non-hierarchical manner to The Cloud. Voila. The Industrial Internet of Things. Devices serving data on the Internet.

Turns out it’s not that simple, is it?

First off, “The Cloud” is actually a data repository (or lots of them) located on a server somewhere and probably within an application of some sort. These applications can be siloed like they mostly are now. Or maybe they share data in a federated manner—the trend of the future.

To accomplish that federation will require standardized ways of describing devices, data, and the metadata. I’ll have more to say about that later relative to some white papers I’m writing for MIMOSA and The OpenO&M Initiative.

Typically data is carried by protocols. OPC (and its latest iteration OPC UA) has been popular in control to HMI applications—and more. Other Internet of Things protocols include XMPP, MQTT, AMQP. Maybe some use JSON. You may have heard of SOAP and RESTful.

Will we live with a multiplicity of protocols? Can we? Will some dominant supplier force a standard?

Check out these recent blogs and articles:

GE Blog – Industrial Internet Protocol Wars

FastCompany, Why the Internet of Things Might Never Speak A Common Language

Inductive Automation Webinar — MQTT the only control protocol you need

OPC – Reshape the Automation Pyramid (is OPC UA all you need?)

Interoperability Among Protocols

What we need is something in the middle that wraps each of the messages in a standard way and delivers it to the application or Enterprise Service Bus. Such a technology is described by the OpenO&M Information Service Bus Model (ISBM), the core component of the Open Industrial Interoperability Ecosystem (OIIE) that I introduced in the last post. The ISBM is actually not a bus, per se, but a set of APIs based on Web Services. It is also described in ISA 95 Part 6 as the Messaging Service Model (MSM).

The MSM is described in a few points by Dennis Brandl:

- Defines a standard method for interfacing with different Enterprise Service Buses

- Enables sending and receiving messages between applications using a common interface

- Reduces the number of interfaces that must be supported in an integration project

Here is a graphic representation Brandl has developed:

These are simple Web Services designed to remove complexity from the transaction at this stage of communication.
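To make the "common interface" idea concrete, here is a minimal Python sketch. It is not the ISBM or MSM API. The names `Envelope`, `BusAdapter`, `InMemoryBus`, and `MessagingService` are hypothetical, and the in-memory bus exists only so the example runs. The point it illustrates is the one in Brandl's list: applications code against one interface, and each underlying bus needs only one adapter.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Dict, List
import uuid


@dataclass
class Envelope:
    """Hypothetical standard wrapper around an application message."""
    topic: str
    body: str
    message_id: str = field(default_factory=lambda: str(uuid.uuid4()))


class BusAdapter(ABC):
    """One adapter per underlying bus or protocol (illustrative only)."""

    @abstractmethod
    def deliver(self, envelope: Envelope) -> None:
        ...

    @abstractmethod
    def fetch(self, topic: str) -> List[Envelope]:
        ...


class InMemoryBus(BusAdapter):
    """Stand-in for a real Enterprise Service Bus, so the sketch can run."""

    def __init__(self) -> None:
        self._queues: Dict[str, List[Envelope]] = {}

    def deliver(self, envelope: Envelope) -> None:
        self._queues.setdefault(envelope.topic, []).append(envelope)

    def fetch(self, topic: str) -> List[Envelope]:
        # Return and clear everything waiting on this topic.
        return self._queues.pop(topic, [])


class MessagingService:
    """The single interface that applications code against."""

    def __init__(self, adapter: BusAdapter) -> None:
        self._adapter = adapter

    def publish(self, topic: str, body: str) -> str:
        envelope = Envelope(topic=topic, body=body)
        self._adapter.deliver(envelope)
        return envelope.message_id

    def read(self, topic: str) -> List[str]:
        return [e.body for e in self._adapter.fetch(topic)]


service = MessagingService(InMemoryBus())
service.publish("pump-101/status", "<Status>Running</Status>")
print(service.read("pump-101/status"))
```

Swapping in a different bus would mean writing another `BusAdapter` subclass. The publishing and reading applications would not change, which is where the reduction in interfaces comes from.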

by Gary Mintchell | Jan 26, 2015 | Automation, Commentary, Data Management, Interoperability, Leadership, Manufacturing IT, News, Operations Management, Organizations, Technology, Workforce

[Updated: 1/28/15]

Last week I attended the board meeting of the Smart Manufacturing Leadership Coalition. Sometimes I’m an idealist working with organizations that I think have the potential to make things better for engineers, managers, and manufacturers in general. I derive no income from them, but sometimes you need to give back to the cause. SMLC is one of those organizations. MESA, OMAC, ISA, CSIA, and MIMOSA are other organizations that I’ve either given a platform to or to whom I have dedicated many hours to help get their message out.

In the area of weird coincidence, just as I was preparing to leave the SMLC meeting, a press release came across my computer from an analyst firm called IDC Manufacturing Insights, also about smart manufacturing. This British firm that is establishing an American foothold first came to my attention several years ago with a research report on adoption of fieldbuses.

The model is the “Why, What, Who, and How of Smart Manufacturing.” See the image for more information. I find this model interesting. As a student of philosophy, I’m intrigued by the four-part Yin-Yang motif. But as a manufacturing model, I find it somewhat lacking.

IDC insight

According to Robert Parker, group vice president at IDC Manufacturing Insights, “Smart manufacturing programs can deliver financial benefits that are tangible and auditable. More importantly, smart manufacturing transitions the production function from one that is capacity centric to one that is capability centric — able to serve global markets and discerning customers.” A new IDC Manufacturing Insights report, IDC PlanScape: Smart Manufacturing – The Path to the Future Factory (Doc #MI253612), uses the IDC PlanScape methodology to provide the framework for a business strategy related to investment in smart manufacturing.

The press release adds, “At its core, smart manufacturing is the convergence of data acquisition, analytics, and automated control to improve the overall effectiveness of a company’s factory network.”

Smart manufacturing

This “smart” term is getting thrown around quite a bit. A group of people from academia, manufacturing, and suppliers began discussing “smart manufacturing” in 2010 and incorporated the “Smart Manufacturing Leadership Coalition” in 2012. I attended a meeting for the first time in early 2013.

Early on, SMLC agreed that “the next step change in U.S. manufacturing productivity would come from a broader use of modeling and simulation technology throughout the manufacturing process”.

Another group, this one from Germany with the sponsorship of the German Federal government, is known as Industry 4.0, or the 4th generation of industry. At times its spokespeople discuss the “smart factory.” This group is also investigating the use of modeling and simulation. However, the two groups take somewhat different paths to, hopefully, a similar destination—more effective and profitable manufacturing systems.

Key findings from IDC:

- Use the overall equipment effectiveness (OEE) equation to understand the potential benefits, and tie those benefits to financial metrics such as revenue, costs, and asset levels to justify investment.

- Broaden the OEE beyond individual pieces of equipment to look at the overall impact on product lines, factories, and the whole network of production facilities.

- Technology investment can be separated into capabilities related to connectivity, data acquisition, analytics, and actuation.

- A unifying architecture is required to bring the technology pieces together.

- Move toward an integrated governance model that incorporates both operation technology (OT) and information technology (IT) resources.

- Choose an investment cadence based on the level of executive support for smart manufacturing.
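For readers who have not worked with the OEE equation, the standard form is Availability × Performance × Quality. The Python sketch below computes it for one machine and then rolls it up across several. The `ShiftRecord` fields and the sample numbers are made up for illustration, and the roll-up rule (ideal time for good units divided by total planned time) is one reasonable choice, not something taken from the IDC report.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class ShiftRecord:
    """Hypothetical per-machine data for one shift (all values nonzero)."""
    planned_minutes: float      # scheduled production time
    run_minutes: float          # time the machine actually ran
    ideal_cycle_minutes: float  # ideal time to make one unit
    total_units: int
    good_units: int


def oee(r: ShiftRecord) -> float:
    """OEE = Availability x Performance x Quality for one machine."""
    availability = r.run_minutes / r.planned_minutes
    performance = (r.ideal_cycle_minutes * r.total_units) / r.run_minutes
    quality = r.good_units / r.total_units
    return availability * performance * quality


def rolled_up_oee(records: Iterable[ShiftRecord]) -> float:
    """Line- or plant-level OEE, weighted by planned time (an assumption)."""
    records = list(records)
    ideal_good = sum(r.ideal_cycle_minutes * r.good_units for r in records)
    planned = sum(r.planned_minutes for r in records)
    return ideal_good / planned


filler = ShiftRecord(480, 400, 1.0, 360, 342)
capper = ShiftRecord(480, 450, 0.5, 800, 780)
print(round(oee(filler), 4), round(oee(capper), 4))
print(round(rolled_up_oee([filler, capper]), 4))
```

Note how much depends on what counts as planned time and what counts as a good unit. Those definitions are decided at the source, machine by machine, and the result moves with them.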

Gary’s view

I’ve told you my affiliations, although I am not a spokesman for any of them. Any views are my own.

So, here is my take on this report. This is not meant to blast IDC. They have developed a model that they can take to clients to discuss manufacturing strategies. I’m sure that some good would come out of that—at least if executives at the company take the direction seriously and actually back good manufacturing. However, the ideas started my thought process.

Following are some ideas that I’ve worked with and developed over the past few years.

- To begin (picky point), I wish they had picked another name in order to avoid confusion over what “smart manufacturing” is.

- While there are a lot of good points within their model, I’d suggest looking beyond just OEE. That is a nice metric, but it is often too open to vagaries in definition and data collection at the source.

- Many companies, indeed, are working toward that IT/OT convergence—and much has been done. Cisco, for example, partners with many automation suppliers.

- SMLC is working on a comprehensive framework and platform (also check out the Smart Manufacturing blog). Meanwhile, I’d also reference the work of MIMOSA (OpenO&M and the Oil & Gas Interoperability Pilot see here and here).

- I’d suggest that IDC take a look into modeling, simulation, and cyber-physical systems. There is also much work being done on “systems of systems” that bring in standards and systems that already exist to a higher order system.

I have not built a model, but I’d look carefully into dataflows and workflows. Can we use standards that already exist to move data from design to operations and maintenance? Can we define workflows—even going outside the plant into the supply chain? Several companies are doing some really good work on analytics and visualization that must be incorporated.

The future looks to be composed of building models from the immense amounts of data we’re collecting and then simulating scenarios before applying new strategies. Then iterating. So, I’d propose that companies think about their larger processes (ISA 95 can be a great start) and start building.

These thoughts are a main theme of this blog. Look for more developments in future posts.

by Gary Mintchell | Dec 11, 2014 | Automation, Interoperability, News, Operations Management, Technology

I started another Website called Physical Asset Lifecycle last year to discuss interoperability.

Two things happened. The reason to have a separate Website evaporated into the vacuum of failed business ideas. I also ran out of time to maintain yet another Website.

So, I will be reposting the work I did there and then building upon that work as an Interoperability Series.

There are key technologies and thinking when it comes to interoperability. The foundation is that we want to break silos of people and technologies so that applications can interoperate and make life easier for operations, maintenance and engineering. This will also improve the efficiency and effectiveness of operations. Much of the work so far is led by MIMOSA.

Interoperability demo

Two years ago, the foundation came together in a pilot demo at the ISA Automation Week conference of 2012. Here is the report from then.

After years of preparatory work, the OpenO&M Initiative participants organized a demonstration pilot project of information interoperability, run like a real project building a debutanizer. It demonstrated the full lifecycle of the plant, including all facets from design through construction to operations and management. The demonstration was held over two days at ISA Automation Week, Sept. 25-26, 2012, in Orlando.

A panel of some of the people who worked on this project presented their work and showed live demonstrations.

One of the most important advances in the project was that the three major design software suppliers (Aveva, Bentley, and Intergraph) were now all involved in enabling export of design data to a standard interface.

EPC for the project was Worley Parsons. Cormac Ryan, manager, Engineering Data Management, Americas, explained the development of the P&IDs using Intergraph’s Smart Plant P&ID generator. It produced a traditional P&ID. Not only a diagram, it is a database-driven tool containing lots of reports and data. The data was published in ISO 15926 format and made available to the rest of the team.

Jim Klein, Industry Solutions Consultant from Aveva, used a schema similar to the Intergraph one. It acted as a second EPC duplicating the data with the object-based database behind the drawing. It can store and link to an engineering database that contains much more data. For example, clicking on a pump diagram can show specifications and other important design information. This data was published out to instantiate the object in a maintenance management system or to create new data as it gets revised during the engineering process. Information can communicate to a “MIMOSA cloud” server.

George Grossmann, Ph.D., Research Fellow, Advanced Computing Research Centre, the University of South Australia, explained the transform engine. Data from Bentley Open Plant was received in two formats, OWL and ECXML. These data go to the UniSA 15926 transform engine. This engine takes input from all three suppliers, exports it in ISO 15926 format, and then transforms it to the MIMOSA standard, exporting CCOM XML.

Next up, Ken Bever, with Assetricity and also CTO of MIMOSA, discussed the transform from CCOM XML to the Assetricity iomog register, assuring that information was mapped to the asset and then sent to IBM’s IIC application in a standardized way. The information was then sent to the OSIsoft PI historian. From PI, data is then accessible to maintenance management and operations management applications. All data references back to the ISO 15926 ontology.

Bruce Hyre, from IBM, explained how the IIC application is a standards-based platform that federates data and provides analytics. It takes CCOM, feeds it into a model server, which then provisions tags in the OSIsoft PI server. His demonstration showed the actual live P&ID from the EPC. He added, “But our focus is on the data supporting that P&ID–the tag list/model tree. You can subscribe to a tag, see information from the upstream systems.” The demonstration therefore showed that data went end-to-end from design to the PI server, provisioning the tags with the live data from the design. In all, 1,092 tags were provisioned in this demonstration.
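The shape of that chain (vendor export, common model, standard XML, provisioned tags) can be sketched in a few lines of Python. Everything here is hypothetical: the vendor field names, the `VENDOR_FIELD_MAPS` table, and the XML layout are invented for illustration and are not the ISO 15926 or CCOM schemas, and the "historian" is just a dictionary rather than a PI server.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# Hypothetical vendor exports: each supplier names the same fields differently.
VENDOR_FIELD_MAPS: Dict[str, Dict[str, str]] = {
    "vendor_a": {"TagNo": "id", "EquipType": "kind"},
    "vendor_b": {"tag_id": "id", "class": "kind"},
}


def to_common(vendor: str, record: Dict[str, str]) -> Dict[str, str]:
    """Step 1: map a vendor record onto one shared vocabulary."""
    mapping = VENDOR_FIELD_MAPS[vendor]
    return {mapping[k]: v for k, v in record.items() if k in mapping}


def to_xml(assets: List[Dict[str, str]]) -> str:
    """Step 2: serialize to a simplified XML (NOT the real CCOM schema)."""
    root = ET.Element("Assets")
    for asset in assets:
        ET.SubElement(root, "Asset", {"id": asset["id"], "kind": asset["kind"]})
    return ET.tostring(root, encoding="unicode")


def provision_tags(xml_text: str, historian: Dict[str, dict]) -> int:
    """Step 3: create one historian entry per new asset; return the count."""
    added = 0
    for element in ET.fromstring(xml_text).iter("Asset"):
        tag = element.get("id")
        if tag not in historian:
            historian[tag] = {"kind": element.get("kind"), "values": []}
            added += 1
    return added


historian: Dict[str, dict] = {}
assets = [
    to_common("vendor_a", {"TagNo": "P-101", "EquipType": "pump"}),
    to_common("vendor_b", {"tag_id": "V-201", "class": "valve"}),
]
print(provision_tags(to_xml(assets), historian))
print(sorted(historian))
```

The design point is that only step 1 knows anything about a particular supplier. Once records are in the shared vocabulary, the downstream steps are the same for everyone, which is what let the pilot take input from three design tools and provision one tag list.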

A video of the presentation can be found on the MIMOSA Website.

Program manager is Alan Johnston. Contact him for more information or to lend your expertise to the effort.
